Today, we're excited to announce the general availability of Feature Serving. Features play a pivotal role in AI applications, and they typically require considerable effort to compute accurately and serve with low latency. This complexity makes it harder to introduce new features that improve the quality of applications in production. With Feature Serving, you can now easily serve pre-computed features and compute on-demand features in real time through a single REST API for your AI applications, without the hassle of managing any infrastructure!

We designed Feature Serving to be fast, secure, and easy to use. It provides the following benefits:

- Fast with low TCO – Feature Serving is designed to provide high performance at low TCO, and can serve features with millisecond latency

- Feature Chaining – Specify chains of pre-computed features and on-demand computations, making it easier to define the calculation of complex real-time features

- Unified Governance – Users can apply their existing security and governance policies to manage and govern their data and ML assets

- Serverless – Feature Serving leverages Online Tables to eliminate the need to manage or provision any resources

- Reduce training-serving skew – Ensure that features used in training and inference have gone through exactly the same transformation, eliminating common failure modes
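To make the training-serving skew point concrete, here is a minimal plain-Python sketch of the underlying idea: one transformation function is the single source of truth, and both the training path and the inference path call it. The feature (`log_price_feature`) and the helper names are hypothetical and are not part of any Databricks API.

```python
import math


def log_price_feature(price: float) -> float:
    """Hypothetical feature transformation: log-scaled, non-negative price."""
    return math.log1p(max(price, 0.0))


def build_training_rows(raw_prices):
    """Offline path: transform a batch of raw prices for model training."""
    return [log_price_feature(p) for p in raw_prices]


def build_inference_row(raw_price):
    """Online path: transform a single raw price at request time."""
    return log_price_feature(raw_price)


# Because both paths share one function, the same raw value always
# produces the same feature value in training and in serving.
training_rows = build_training_rows([120.0, 80.0])
serving_value = build_inference_row(120.0)
assert training_rows[0] == serving_value
```

Skew typically appears when the two paths reimplement the transformation separately and drift apart; sharing one definition removes that failure mode.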

In this blog, we'll walk through the basics of Feature Serving, share more details about the simplified user journey with Databricks Online Tables, and discuss how customers are already using it in various AI use cases.

What is Feature Serving?

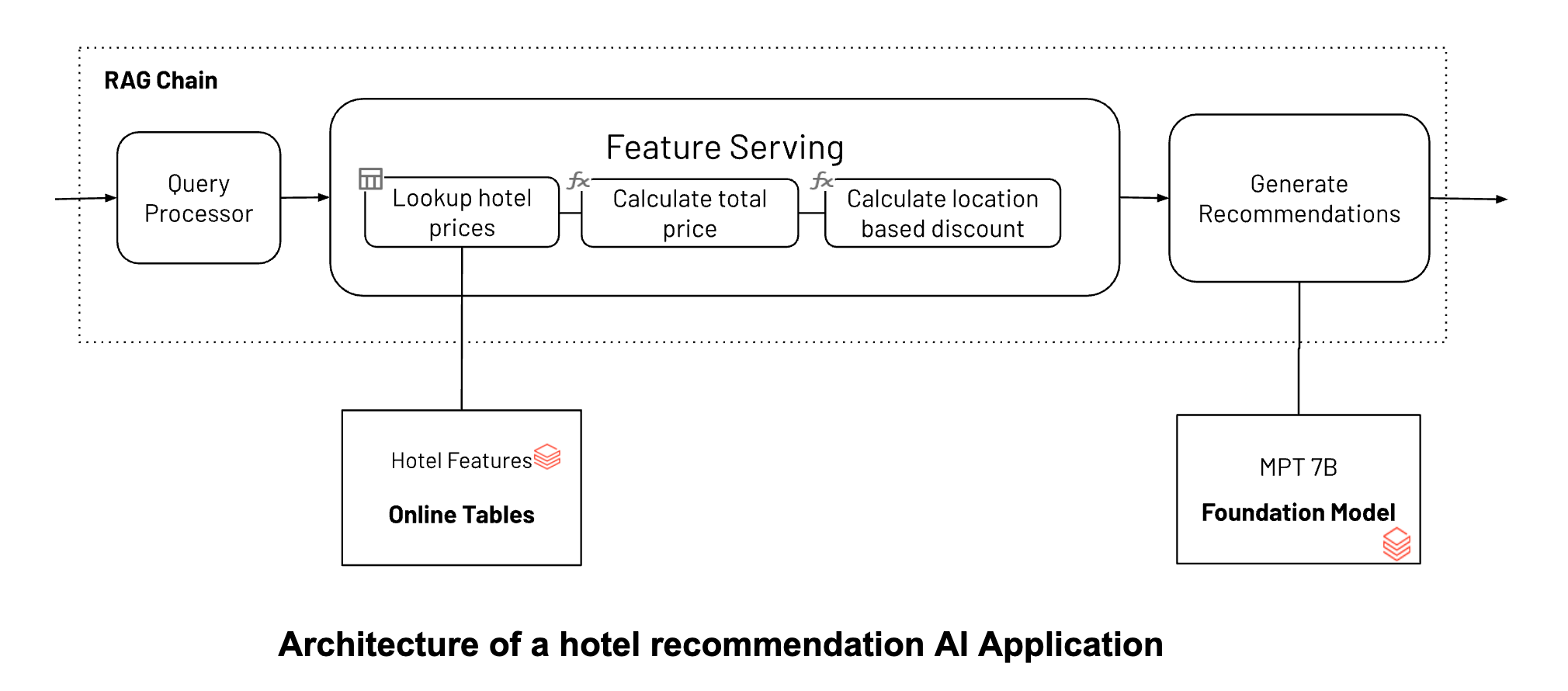

Feature Serving is a low-latency, real-time service designed to serve pre-computed and on-demand ML features for real-time AI applications such as personalized recommendations, customer service chatbots, fraud detection, and compound Gen AI systems. Features are transformations of raw data that are used to create meaningful signals for machine learning models.

In the previous blog post, we talked about three types of feature computation architectures: Batch, Streaming and On-demand. These feature computation architectures lead to two categories of features:

- Pre-computed features – Computed in batch or streaming, pre-computed features can be calculated ahead of the prediction request and stored in an offline Delta Table in Unity Catalog, to be used in training a model and served online for inference

- On-demand features – For features that are only computable at the time of inference, i.e., at the same time as the request to the model, the effective data freshness requirement is "immediate". These features are typically computed using context from the request such as the real-time location of a user, pre-computed features, or a chained computation of both.

Feature Serving (AWS | Azure) makes both types of features available with milliseconds of latency for real-time AI applications. Feature Serving can be used in a variety of use cases:

- Recommendations – personalized recommendations with real-time, context-aware features

- Fraud Detection – identify and track fraudulent transactions with real-time signals

- RAG applications – deliver contextual signals to a RAG application

Customers using Feature Serving have found it easier to focus on improving the quality of their AI applications by experimenting with more features, without worrying about the operational overhead of making those features accessible to their AI applications.

Databricks Feature Serving's easy online service setup made it easy for us to implement our recommendation system for our clients. It allowed us to swiftly transition from model training to deploying personalized recommendations for all customers. Feature Serving has allowed us to provide our customers with highly relevant recommendations, handling scalability effortlessly and ensuring production reliability. This has allowed us to focus on our main specialty: personalized recommendations!

– Mirina Gonzales Rodriguez, Data Ops Tech Lead at Yape Peru

Native Integration with Databricks Data Intelligence Platform

Feature Serving is natively integrated with the Databricks Data Intelligence Platform and makes it easy for ML developers to make pre-computed features stored in any Delta Table accessible with milliseconds of latency using Databricks Online Tables (AWS | Azure) (currently in Public Preview). This provides a simple solution that doesn't require you to maintain a separate set of data ingestion pipelines to make your features available online and continuously updated.

Databricks automatic feature lookup and real-time computation in model serving has transformed our liquidity data management to improve the payment experience of a multitude of customers. The integration with Online Tables enables us to accurately predict account liquidity needs based on live market data points, streamlining our operations within the Databricks ecosystem. Databricks Online Tables provided a unified experience without the need to manage our own infrastructure as well as reduced latency for real time predictions!

– Jon Wedrogowski, Senior Manager Applied Science at Ripple

Let's look at an example of a hotel recommendation chatbot, where we create a Feature Serving endpoint in four simple steps and use it to enable real-time filtering for the application.

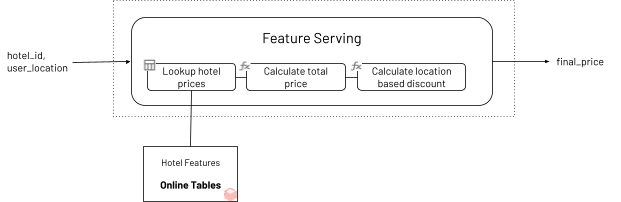

The example assumes that you have a Delta table in Unity Catalog called main.travel.hotel_prices with pre-computed offline features, as well as a function called main.travel.compute_hotel_total_prices registered in Unity Catalog that computes the total price with discount. You can see details on how to register on-demand functions here (AWS|Azure).

Step 1. Create an online table to query the pre-computed features stored in main.travel.hotel_prices using the UI or our REST API/SDK (AWS|Azure)

    from databricks.sdk import WorkspaceClient
    from databricks.sdk.service.catalog import *

    w = WorkspaceClient()

    # Create an online table keyed by hotel_id, sourced from the offline Delta table
    spec = OnlineTableSpec(
        primary_key_columns=["hotel_id"],
        source_table_full_name="main.travel.hotel_prices",
        run_triggered=OnlineTableSpecTriggeredSchedulingPolicy.from_dict({'triggered': 'true'}),
        perform_full_copy=True)

    w.online_tables.create(name='main.travel.hotel_prices_online', spec=spec)

Step 2. Create a FeatureSpec specifying the ML signals you want to serve in the Feature Serving endpoint (AWS | Azure).

The FeatureSpec can be composed of:

- Lookup of pre-computed data

- Computation of an on-demand feature

- Chained featurization using both pre-computed and on-demand features
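As a conceptual illustration of these three building blocks, here is a small plain-Python sketch that does not use any Databricks API. The in-memory `HOTEL_PRICES` dictionary stands in for an online table, and the price values, the `discount_rate` parameter, and all function names are hypothetical. The actual discount logic of the registered Unity Catalog function is not shown in this post.

```python
# Stand-in for an online table keyed by hotel_id (hypothetical values).
HOTEL_PRICES = {1: 150.0, 2: 90.0}


def lookup_hotel_price(hotel_id: int) -> float:
    """Pre-computed feature: a key lookup, nothing is computed at request time."""
    return HOTEL_PRICES[hotel_id]


def compute_total_price(hotel_price: float, num_days: int, discount_rate: float) -> float:
    """On-demand feature: derived from values only known at request time."""
    return round(hotel_price * num_days * (1.0 - discount_rate), 2)


def chained_total_price(hotel_id: int, num_days: int, discount_rate: float) -> float:
    """Chained feature: the lookup result feeds the on-demand computation."""
    hotel_price = lookup_hotel_price(hotel_id)
    return compute_total_price(hotel_price, num_days, discount_rate)


# Hotel 1 for 10 nights with a 10% discount.
total = chained_total_price(hotel_id=1, num_days=10, discount_rate=0.1)
```

In a FeatureSpec, the lookup step corresponds to a `FeatureLookup` and the computation step to a `FeatureFunction`; the chaining comes from binding the lookup's output column to one of the function's inputs.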

Here we want to calculate a chained feature by first looking up pre-computed hotel prices, then calculating the total price based on the discount for the user's location and the number of days for the stay.

    from databricks.feature_engineering import (
        FeatureEngineeringClient,
        FeatureFunction,
        FeatureLookup,
    )

    fe = FeatureEngineeringClient()

    features = [
        # Look up the pre-computed hotel price by primary key
        FeatureLookup(
            table_name="main.travel.hotel_prices",
            lookup_key="hotel_id"
        ),
        # Chain the looked-up price into the on-demand function
        FeatureFunction(
            udf_name="main.travel.compute_hotel_total_prices",
            output_name="distance",
            input_bindings={
                "hotel_price": "hotel_price",
                "num_days": "num_days",
                "user_latitude": "user_latitude",
                "user_longitude": "user_longitude"
            },
        ),
    ]

    feature_spec_name = "main.travel.hotel_price_spec"
    fe.create_feature_spec(name=feature_spec_name, features=features, exclude_columns=None)

Step 3. Create a Feature Serving endpoint using the UI or our REST API/SDK (AWS | Azure).

Our serverless infrastructure automatically scales to your workloads, so you don't need to manage servers for your hotel features endpoint. This endpoint will return features based on the FeatureSpec from Step 2 and the data fetched from the online table set up in Step 1.

    from databricks.sdk import WorkspaceClient
    from databricks.sdk.service.serving import EndpointCoreConfigInput, ServedEntityInput

    workspace = WorkspaceClient()

    # Create the endpoint and wait until it is ready
    workspace.serving_endpoints.create_and_wait(
        name="hotel-price-endpoint",
        config=EndpointCoreConfigInput(
            served_entities=[
                ServedEntityInput(
                    entity_name="main.travel.hotel_price_spec",
                    scale_to_zero_enabled=True,
                    workload_size="Small"
                )]
        )
    )

Step 4. Once the Feature Serving endpoint is ready, you can query it with primary keys and context data using the UI or our REST API/SDK

    import mlflow.deployments

    client = mlflow.deployments.get_deploy_client("databricks")

    endpoint_name = "hotel-price-endpoint"  # the endpoint created in Step 3

    response = client.predict(
        endpoint=endpoint_name,
        inputs={
            "dataframe_records": [
                {"hotel_id": 1, "num_days": 10, "user_latitude": 598, "user_longitude": 280},
                {"hotel_id": 2, "num_days": 10, "user_latitude": 598, "user_longitude": 280},
            ]
        },
    )

Now you can use the new Feature Serving endpoint in building a compound AI system, retrieving total price signals to help a chatbot recommend personalized results to the user.
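Since the endpoint is also exposed over REST, the same query can be sent without the MLflow client. The sketch below only builds the HTTP request using Python's standard library and does not send it. It assumes the usual `/serving-endpoints/<name>/invocations` path for Databricks serving endpoints and bearer-token authentication; the workspace host, the token value, and the `build_invocation_request` helper are placeholders introduced for illustration.

```python
import json
import urllib.request


def build_invocation_request(host, endpoint_name, token, records):
    """Build (but do not send) a POST request for a serving endpoint query."""
    url = f"{host.rstrip('/')}/serving-endpoints/{endpoint_name}/invocations"
    body = json.dumps({"dataframe_records": records}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


request = build_invocation_request(
    host="https://example.cloud.databricks.com",  # placeholder workspace URL
    endpoint_name="hotel-price-endpoint",
    token="<personal-access-token>",  # placeholder, never hard-code real tokens
    records=[
        {"hotel_id": 1, "num_days": 10, "user_latitude": 598, "user_longitude": 280},
    ],
)

# To actually send the request, pass it to urllib.request.urlopen(request)
# and decode the JSON response body.
```

Keeping request construction separate from sending makes the payload easy to inspect and unit test before pointing it at a live workspace.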

Getting Started with Databricks Feature Serving

Sign up for the GenAI Payoff in 2024: Build and deploy production-quality GenAI Apps Virtual Event on 3/14