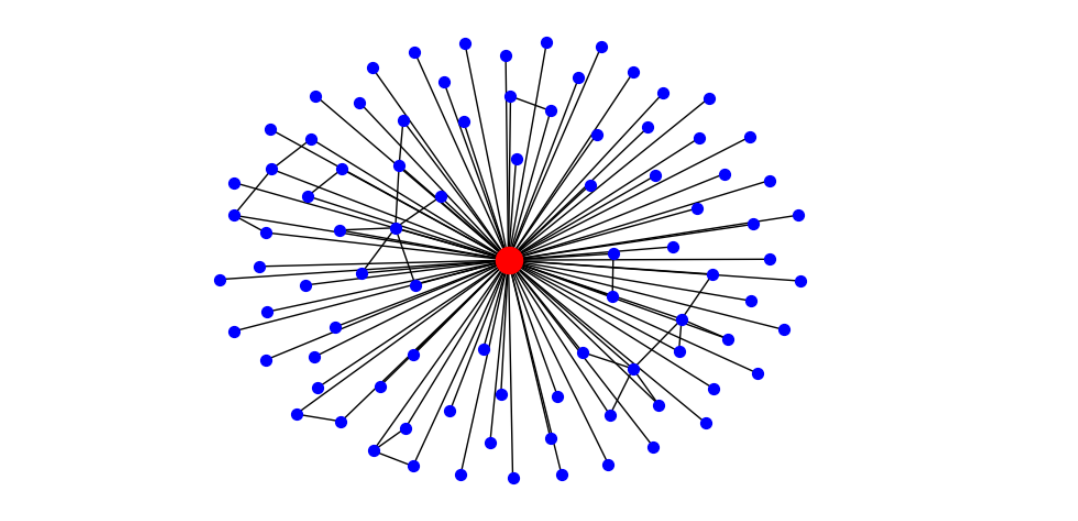

Ego Graph algorithm (Source: NetworkX.org)

Nvidia has expanded its support of NetworkX graph analytic algorithms in RAPIDS, its open source library for accelerated computing. The expansion means data scientists can run 40-plus NetworkX algorithms on Nvidia GPUs without changing their Python code, potentially reducing processing time by hours on tough graph problems.

Initially released by Los Alamos National Laboratory 19 years ago, NetworkX is an open source collection of Python-based algorithms “for the creation, manipulation, and study of the structure, dynamics, and functions of complex networks,” as the NetworkX website explains. It’s particularly good at tackling large-scale graph problems, such as those encompassing 10 million or more nodes and 100 million or more edges.

Nvidia has been supporting NetworkX in RAPIDS since November, when it held its AI and Data Science Summit. The company started out supporting only three NetworkX algorithms at that time, which limited the usefulness of the library. This month, the company is opening up its support by bringing more than 40 NetworkX algorithms into the RAPIDS fold.

Nvidia customers who need the processing scale of GPUs to solve their graph problems will appreciate the addition of the algorithms to RAPIDS, says Nick Becker, a senior technical product manager with Nvidia.

“We’ve been working with the NetworkX community [and the core development team] for a while on making it possible to [have] zero code-change acceleration,” Becker tells Datanami. “Just configure NetworkX to use the RAPIDS backend, and keep your code the same. Just like we did with Pandas, you can keep your code the same. Tell your Python to use this separate backend, and it’ll fall back to the CPU.”
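As a rough illustration of what that looks like in practice, the sketch below requests the GPU backend explicitly and falls back to stock NetworkX if that request fails. It assumes a recent NetworkX release whose algorithm functions accept a `backend=` keyword, and that the RAPIDS `nx-cugraph` package is installed and registers a backend named `cugraph`. The helper name `betweenness` is made up for this example. The zero-code-change route Becker describes is switched on through an environment variable instead (early releases documented `NETWORKX_AUTOMATIC_BACKENDS=cugraph`); the variable name has changed between releases, so check the documentation for your version.

```python
import networkx as nx


def betweenness(graph):
    """Compute betweenness centrality, preferring the GPU backend if present.

    Hypothetical helper for illustration; not part of NetworkX or RAPIDS.
    """
    try:
        # Assumption: nx-cugraph is installed and exposes a "cugraph" backend.
        return nx.betweenness_centrality(graph, backend="cugraph")
    except (ImportError, TypeError):
        # ImportError: the requested backend is not installed.
        # TypeError: this NetworkX version does not accept backend=.
        return nx.betweenness_centrality(graph)


# Small built-in social graph; the calling code is the same on CPU or GPU.
G = nx.karate_club_graph()
scores = betweenness(G)
most_central = max(scores, key=scores.get)
print(most_central, scores[most_central])
```

On a graph this small the GPU offers no benefit; the point is only that the calling code does not change between the two paths.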

Graph algorithms can solve a certain class of connected network problems more efficiently than other computational approaches. By treating a given piece of data as a node that’s connected (via edges) to other nodes, the algorithm can determine how connected or similar it is to other pieces of data (or nodes). Going out a single hop is akin to doing a SQL join on relational data. But going out three or more hops becomes too computationally expensive using the standard SQL method, whereas the graph approach scales more linearly.
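The multi-hop expansion described here can be sketched as a breadth-first search over an adjacency list. This is a dependency-free illustration, not NetworkX’s implementation; the function name `k_hop_neighbors` and the toy `friends` graph are made up for the example. NetworkX covers the same idea with `ego_graph` and its `radius` argument.

```python
from collections import deque


def k_hop_neighbors(adjacency, source, max_hops):
    """Return {node: hop_count} for every node within max_hops of source."""
    hops = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if hops[node] == max_hops:
            continue  # do not expand past the hop limit
        for neighbor in adjacency.get(node, ()):
            if neighbor not in hops:  # first visit is the shortest hop count
                hops[neighbor] = hops[node] + 1
                queue.append(neighbor)
    return hops


# Hypothetical toy social graph: each key lists that person's direct connections.
friends = {
    "ana": ["ben"],
    "ben": ["ana", "cho"],
    "cho": ["ben", "dev"],
    "dev": ["cho", "eli"],
    "eli": ["dev"],
}

one_hop = k_hop_neighbors(friends, "ana", 1)      # comparable to a single join
three_hops = k_hop_neighbors(friends, "ana", 3)   # three chained self-joins in SQL
print(one_hop)
print(three_hops)
```

Each node is queued at most once and each edge is examined a bounded number of times, so the work grows with the size of the region explored rather than with the number of joins chained together.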

In the real world, graph problems naturally crop up in things like social media engagement, where a person (a node) can have many connections (or edges) to other people. Fraud detection is another classic graph analytic workload, with similar topologies appearing among groups of cybercriminals. With more NetworkX algorithms supported in RAPIDS, data scientists can bring the full power of GPU computing to bear on large-scale graph problems.

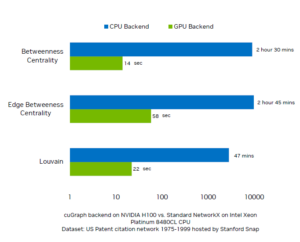

According to Becker, the RAPIDS graph expansion can reduce by hours the amount of time needed to calculate big graph problems on social media data.

“If I’m a data scientist, waiting two hours to calculate betweenness centrality, which is the name of an algorithm, that’s a problem, because I need to do this in an exploratory way. I need to iterate,” he says. “Unfortunately, that’s just not conducive to me doing that. But now I can take that same workflow with my Nvidia-powered laptop or workstation or cloud node, and go from a couple of hours to just a minute.”

But it’s not just the big graph problems, Becker says, as the combination of NetworkX, RAPIDS, and GPU-accelerated hardware can help solve smaller-scale problems too.

“This is important even on a smaller scale,” he says. “I don’t need to be solving the world’s largest problem to be affected by the fact that my graph algorithm takes 30 minutes or an hour to run on just a medium-sized graph.”

Betweenness centrality algorithm (Source: NetworkX.org)

NetworkX isn’t the first graph library Nvidia has supported with RAPIDS. The company has offered cuGraph on RAPIDS since it launched back in 2018, Becker says. CuGraph is still a valuable tool, particularly because it has advanced capabilities like multi-node and multi-GPU functionality. “It’s got all sorts of goodies,” the Nvidia product manager says.

But the adoption of NetworkX is much broader, with about 40 million monthly downloads and users likely numbering over a million. Distributed under a BSD-new license, NetworkX is already an established part of the PyData ecosystem. That existing momentum means NetworkX support has the potential to significantly bolster adoption of RAPIDS for graph problems, Becker says.

“If you’re just using NetworkX, you’ve never had a good way to, without significant work, take your code and easily just use that on a GPU,” he says. “That’s what we’re really excited about, making it seamless.”

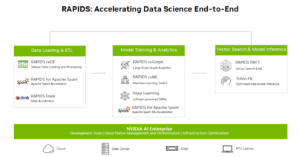

RAPIDS is a core component of Nvidia’s strategy for accelerating data science workloads. The library is largely focused on supporting the PyData ecosystem, via things like Pandas as well as scikit-learn and XGBoost for traditional machine learning. It also supports Apache Spark and Dask for large-scale computing challenges.

Nvidia recently added support for accelerating Spark machine learning (Spark ML) workloads to go along with its previous support for dataframes and SQL Spark workloads, Becker says. It’s also giving customers’ generative AI (GenAI) initiatives a boost with RAPIDS RAFT, which supports Meta’s FAISS library for similarity search and also Milvus, a vector database.

“RAPIDS’ goal, which we reiterated at the Data Science Summit…is that we want to meet data scientists where they are,” Becker says. “And that means providing capabilities where it’s possible to do it within those experiences in a seamless way. But sometimes it’s challenging to do that. It’s a work in progress, and we want to enable people to move quickly.”

RAPIDS will be one of the topics Nvidia covers at next month’s GPU Technology Conference (GTC), which is being held in person for the first time in five years. GTC takes place March 18 through 21 in San Jose, California. You can register at https://www.nvidia.com/gtc/.
