At this year’s International Conference on Machine Learning (ICML 2025), Jaeho Kim, Yunseok Lee and Seulki Lee won an outstanding position paper award for their work Position: The AI Conference Peer Review Crisis Demands Author Feedback and Reviewer Rewards. We hear from Jaeho about the problems they were trying to address, and their proposed author feedback mechanism and reviewer reward system.

Could you say something about the problem that you address in your position paper?

Our position paper addresses the problems plaguing current AI conference peer review systems, while also raising questions about the future direction of peer review.

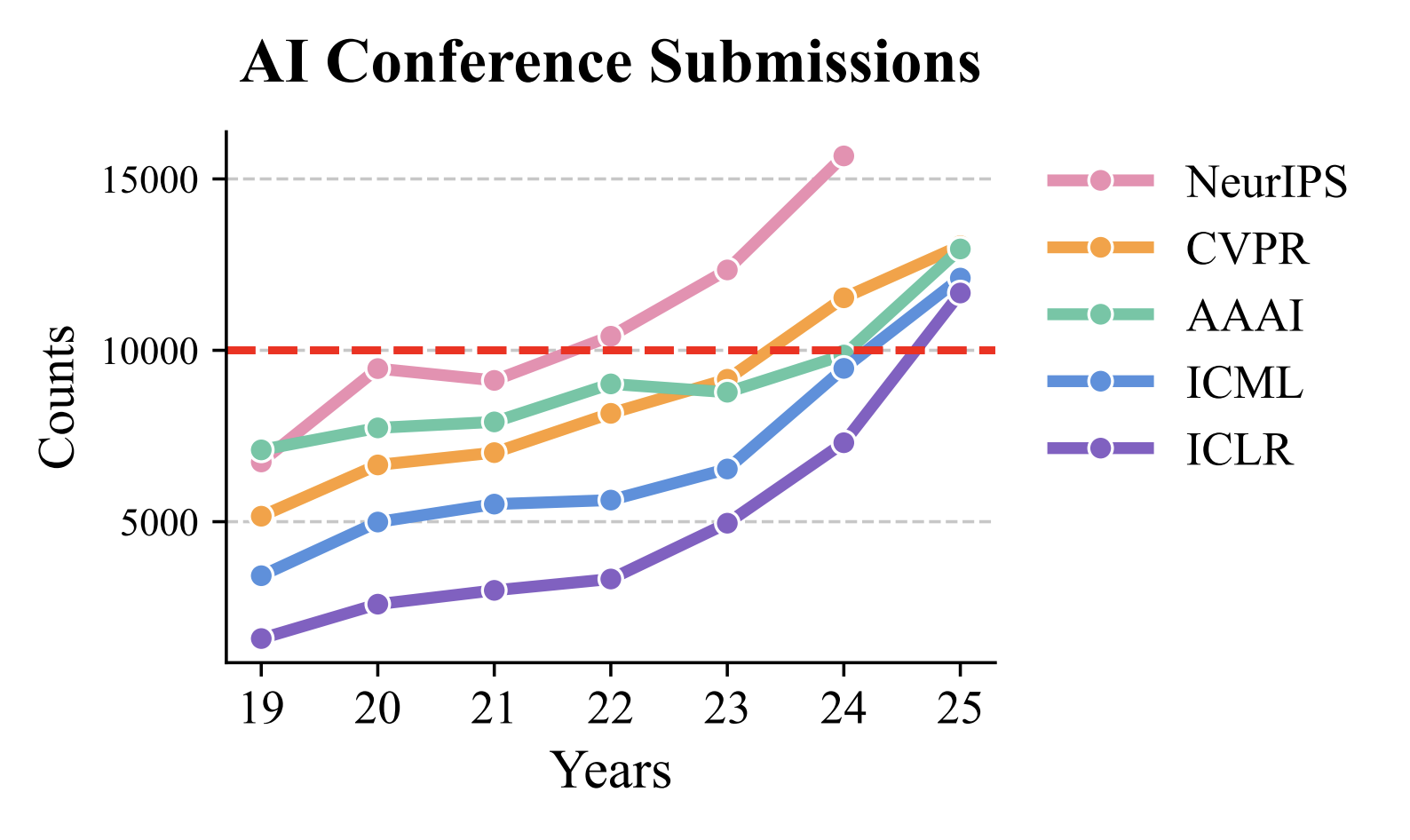

The imminent problem with the current peer review system in AI conferences is the exponential growth in paper submissions driven by increasing interest in AI. To put this in numbers, NeurIPS received over 30,000 submissions this year, while ICLR saw a 59.8% increase in submissions in just one year. This huge increase in submissions has created a fundamental mismatch: while paper submissions grow exponentially, the pool of qualified reviewers has not kept pace.

Submissions to some of the major AI conferences over the past few years.

This imbalance has severe consequences. The majority of papers are no longer receiving reviews of adequate quality, undermining peer review’s essential function as a gatekeeper of scientific knowledge. When the review process fails, inappropriate papers and flawed research can slip through, potentially polluting the scientific record.

Considering AI’s profound societal impact, this breakdown in quality control poses risks that extend far beyond academia. Poor research that enters the scientific discourse can mislead future work, influence policy decisions, and ultimately hinder genuine knowledge advancement. Our position paper focuses on this critical question and proposes methods for enhancing the quality of review, thus leading to better dissemination of knowledge.

What do you argue for in the position paper?

Our position paper proposes two major changes to tackle the current peer review crisis: an author feedback mechanism and a reviewer reward system.

First, the author feedback system enables authors to formally evaluate the quality of the reviews they receive. This system allows authors to assess reviewers’ comprehension of their work, identify potential signs of LLM-generated content, and establish basic safeguards against unfair, biased, or superficial reviews. Importantly, this isn’t about penalizing reviewers, but rather about creating minimal accountability to protect authors from the small minority of reviewers who may not meet professional standards.

Second, our reviewer incentive system provides both immediate and long-term professional value for quality reviewing. For short-term motivation, author evaluation scores determine eligibility for digital badges (such as “Top 10% Reviewer” recognition) that can be displayed on academic profiles like OpenReview and Google Scholar. For long-term career impact, we propose novel metrics like a “reviewer impact score”, essentially an h-index calculated from the subsequent citations of papers a reviewer has evaluated. This treats reviewers as contributors to the papers they help improve and validates their role in advancing scientific knowledge.
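To make the “reviewer impact score” concrete, here is a minimal sketch that applies the standard h-index definition to the citation counts of the papers a reviewer has evaluated. The function name and the sample citation counts are hypothetical, and the exact definition used in the position paper may differ.

```python
def reviewer_impact_score(citation_counts):
    """Standard h-index over the citation counts of reviewed papers.

    Returns the largest h such that at least h of the reviewed papers
    have h or more citations each. (Hypothetical helper, not the
    authors' reference implementation.)
    """
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, citations in enumerate(ranked, start=1):
        if citations >= rank:
            h = rank
        else:
            break
    return h


# Illustrative, made-up citation counts for six reviewed papers.
example_counts = [25, 8, 5, 3, 3, 0]
example_score = reviewer_impact_score(example_counts)
```

With these made-up counts, three papers have at least three citations each but fewer than four papers have four or more, so the score is 3.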

Could you tell us more about your proposal for this new two-way peer review method?

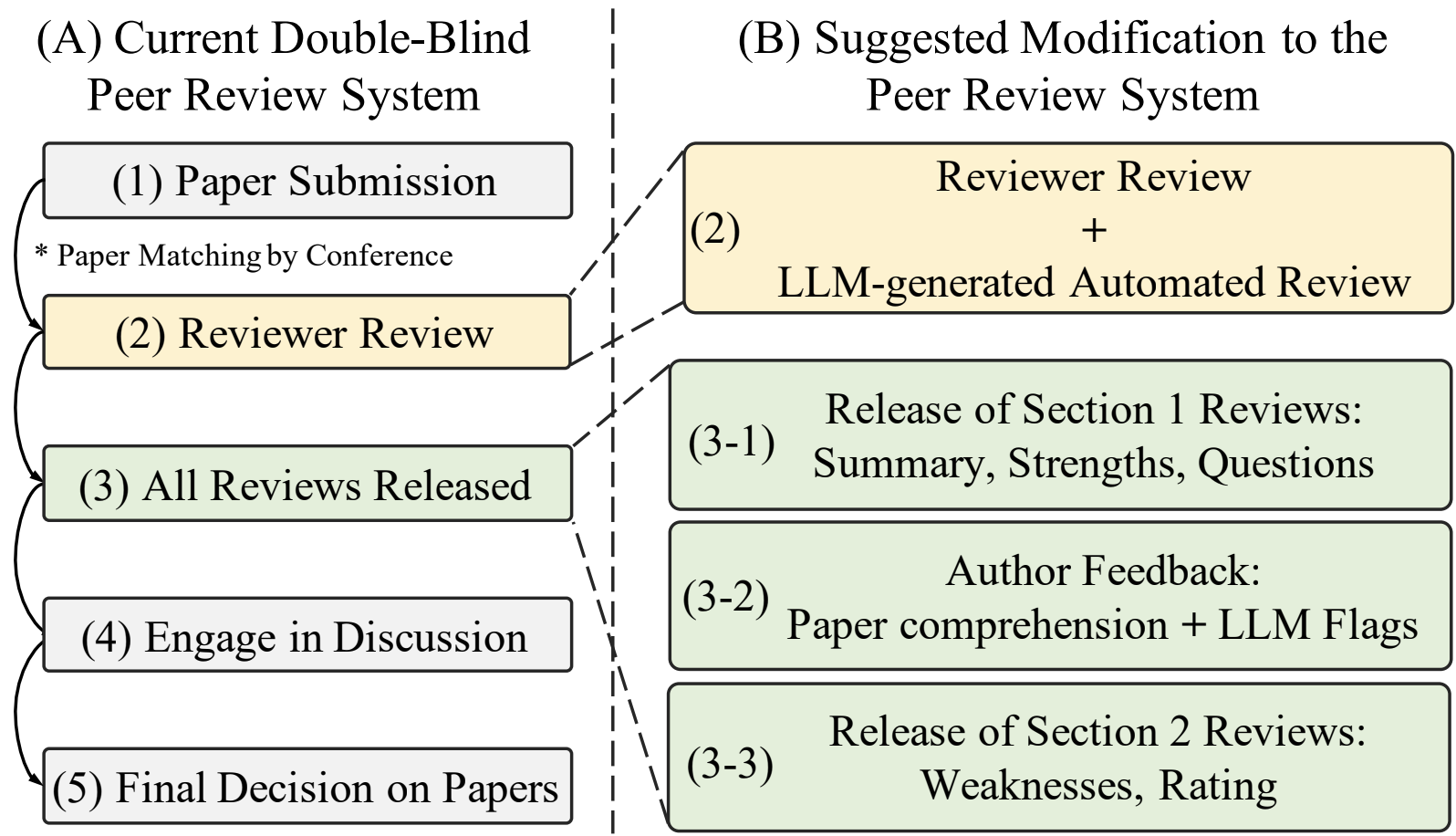

Our proposed two-way peer review system makes one key change to the current process: we split the release of reviews into two phases.

The authors’ proposed modification to the peer-review system.

Currently, authors submit papers, reviewers write complete reviews, and all reviews are released at once. In our system, authors first receive only the neutral sections: the summary, strengths, and questions about their paper. Authors then provide feedback on whether reviewers properly understood their work. Only after this feedback do we release the second part containing weaknesses and ratings.
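The two-phase release described above can be sketched as a small data model. This is only an illustration under stated assumptions: the `Review` class, its field names, the integer feedback score, and both function names are hypothetical and do not come from the paper or from any conference platform.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Review:
    # Neutral sections, released to authors in phase one.
    summary: str
    strengths: str
    questions: str
    # Critical sections, withheld until authors give feedback.
    weaknesses: str
    rating: int
    # Hypothetical author feedback score; None until submitted.
    author_feedback: Optional[int] = None


def release_phase_one(review: Review) -> dict:
    """Return only the neutral sections of the review."""
    return {
        "summary": review.summary,
        "strengths": review.strengths,
        "questions": review.questions,
    }


def release_phase_two(review: Review) -> Optional[dict]:
    """Return weaknesses and rating only after author feedback exists."""
    if review.author_feedback is None:
        return None
    return {"weaknesses": review.weaknesses, "rating": review.rating}
```

Gating the second phase on `author_feedback` mirrors the ordering in the proposal: the feedback is collected before authors can see the weaknesses or the rating.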

This approach offers three main benefits. First, it’s practical: we don’t need to change existing timelines or review templates. The second phase can be released immediately after the authors give feedback. Second, it protects authors from irresponsible reviews, since reviewers know their work will be evaluated. Third, since reviewers typically review multiple papers, we can track their feedback scores to help area chairs identify (ir)responsible reviewers.
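As a rough illustration of the third benefit, the sketch below averages author feedback scores per reviewer and lists the reviewers whose average falls below a cutoff. The 1 to 5 score scale, the threshold value, the reviewer identifiers, and the function names are all assumptions made for this example; the paper does not prescribe them here.

```python
from collections import defaultdict
from statistics import mean


def mean_feedback_by_reviewer(feedback):
    """Average the author feedback scores for each reviewer.

    `feedback` is an iterable of (reviewer_id, score) pairs, one pair
    per reviewed paper.
    """
    scores = defaultdict(list)
    for reviewer_id, score in feedback:
        scores[reviewer_id].append(score)
    return {rid: mean(vals) for rid, vals in scores.items()}


def flag_for_area_chair(averages, threshold=2.0):
    """Return reviewer ids whose average score is below the threshold.

    The threshold is a hypothetical cutoff on an assumed 1-5 scale.
    """
    return sorted(rid for rid, avg in averages.items() if avg < threshold)


# Made-up feedback records for three reviewers.
records = [("r1", 5), ("r1", 4), ("r2", 1), ("r2", 2), ("r3", 3)]
averages = mean_feedback_by_reviewer(records)
flagged = flag_for_area_chair(averages)
```

A simple mean is used only for clarity; a real deployment would likely need safeguards against retaliatory or strategic scoring by authors.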

The key insight is that authors know their own work best and can quickly spot when a reviewer hasn’t properly engaged with their paper.

Could you talk about the concrete reward system that you suggest in the paper?

We propose both short-term and long-term rewards to address reviewer motivation, which naturally declines over time even when reviewers start out enthusiastic.

Short-term: Digital badges displayed on reviewers’ academic profiles, awarded based on author feedback scores. The goal is to make reviewer contributions more visible. While some conferences list top reviewers on their websites, these lists are hard to find. Our badges would be prominently displayed on profiles and could even be printed on conference name tags.

Example of a badge that could appear on profiles.

Long-term: Numerical metrics to quantify reviewer impact at AI conferences. We suggest tracking measures like an h-index for reviewed papers. These metrics could be included in academic portfolios, similar to how we currently track publication impact.

The core idea is to create tangible career benefits for reviewers while establishing peer review as a professional academic service that rewards both authors and reviewers.

What do you think could be some of the pros and cons of implementing this system?

The benefits of our system are threefold. First, it is a very practical solution. Our approach doesn’t change current review schedules or review burdens, making it easy to incorporate into existing systems. Second, it encourages reviewers to act more responsibly, knowing their work will be evaluated. We emphasize that most reviewers already act professionally. However, even a small number of irresponsible reviewers can seriously damage the peer review system. Third, with sufficient scale, author feedback scores will make conferences more sustainable. Area chairs will have better information about reviewer quality, enabling them to make more informed decisions about paper acceptance.

However, there is strong potential for gaming by reviewers. Reviewers might optimize for rewards by giving overly positive reviews. Measures to counteract these problems are definitely needed. We are currently exploring solutions to address this issue.

Are there any concluding thoughts you’d like to add about the potential future of conferences and peer-review?

One emerging trend we’ve observed is the increasing discussion of LLMs in peer review. While we believe current LLMs have several weaknesses (e.g., prompt injection, shallow reviews), we also think they will eventually surpass humans. When that happens, we will face a fundamental dilemma: if LLMs provide better reviews, why should humans be reviewing? The rapid rise of LLMs caught us unprepared and created chaos, and we cannot afford a repeat. We should start preparing for this question as soon as possible.

About Jaeho

Jaeho Kim is a Postdoctoral Researcher at Korea University with Professor Changhee Lee. He received his Ph.D. from UNIST under the supervision of Professor Seulki Lee. His main research focuses on time series learning, particularly developing foundation models that generate synthetic and human-guided time series data to reduce computational and data costs. He also contributes to improving the peer review process at major AI conferences, with his work recognized by the ICML 2025 Outstanding Position Paper Award.

Read the work in full

Position: The AI Conference Peer Review Crisis Demands Author Feedback and Reviewer Rewards, Jaeho Kim, Yunseok Lee, Seulki Lee.

AIhub is a non-profit dedicated to connecting the AI community to the public by providing free, high-quality information in AI.
