Generative AI has opened new worlds of possibilities for businesses and is being enthusiastically embraced across organizations. According to a recent MIT Technology Review report, all 600 CIOs surveyed said they are increasing their investment in AI, and 71% are planning to build their own custom LLMs or other GenAI models. However, many organizations may lack the tools needed to effectively develop models trained on their private data.

Making the leap to Generative AI is not just about deploying a chatbot; it requires reshaping the foundational aspects of data management. Central to this transformation is the emergence of Data Lakehouses as the new “modern data stack.” These advanced data architectures are essential to harnessing the full potential of GenAI, enabling faster, more cost-effective, and wider democratization of data and AI technologies. As businesses increasingly rely on GenAI-powered tools and applications for competitive advantage, the underlying data infrastructure must evolve to support these advanced technologies effectively and securely.

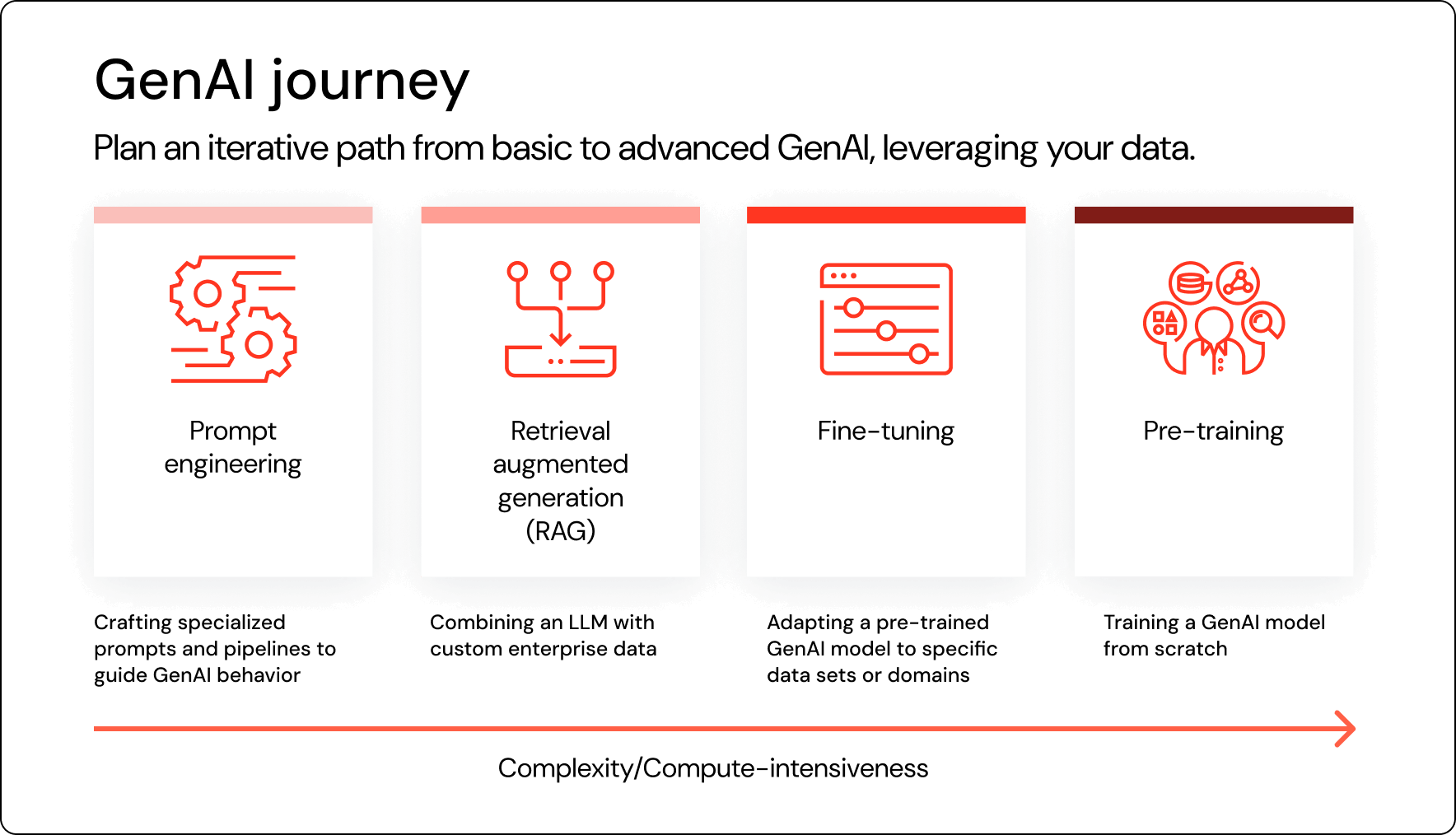

The Databricks Data Intelligence Platform is an end-to-end platform that can support the entire AI lifecycle, from ingestion of raw data, through model customization, and ultimately to production-ready applications. It gives organizations more control, engineering efficiency, and lower TCO: full control over models and data through more rigorous security and monitoring; an easier path to productionizing ML models with governance, lineage, and transparency; and reduced costs to train a company’s own models. Databricks stands out as the sole provider capable of offering these comprehensive services, including prompt engineering, RAG, fine-tuning, and pre-training, specifically tailored to develop a company’s proprietary models from the ground up.

This blog explains why companies are using Databricks to build their own GenAI applications, why the Databricks Data Intelligence Platform is the best platform for enterprise AI, and how to get started. Excited? We are too! Topics include:

- How can my organization use LLMs trained on our own data to power GenAI applications and smarter business decisions?

- How can we use the Databricks Data Intelligence Platform to fine-tune, govern, operationalize, and manage all of our data, models, and APIs on a unified platform, while maintaining compliance and transparency?

- How can my company leverage the Databricks Data Intelligence Platform as we progress along the AI maturity curve, while making full use of our proprietary data?

GenAI for Enterprises: Leveraging AI with the Databricks Data Intelligence Platform

Why use a Data Intelligence Platform for GenAI?

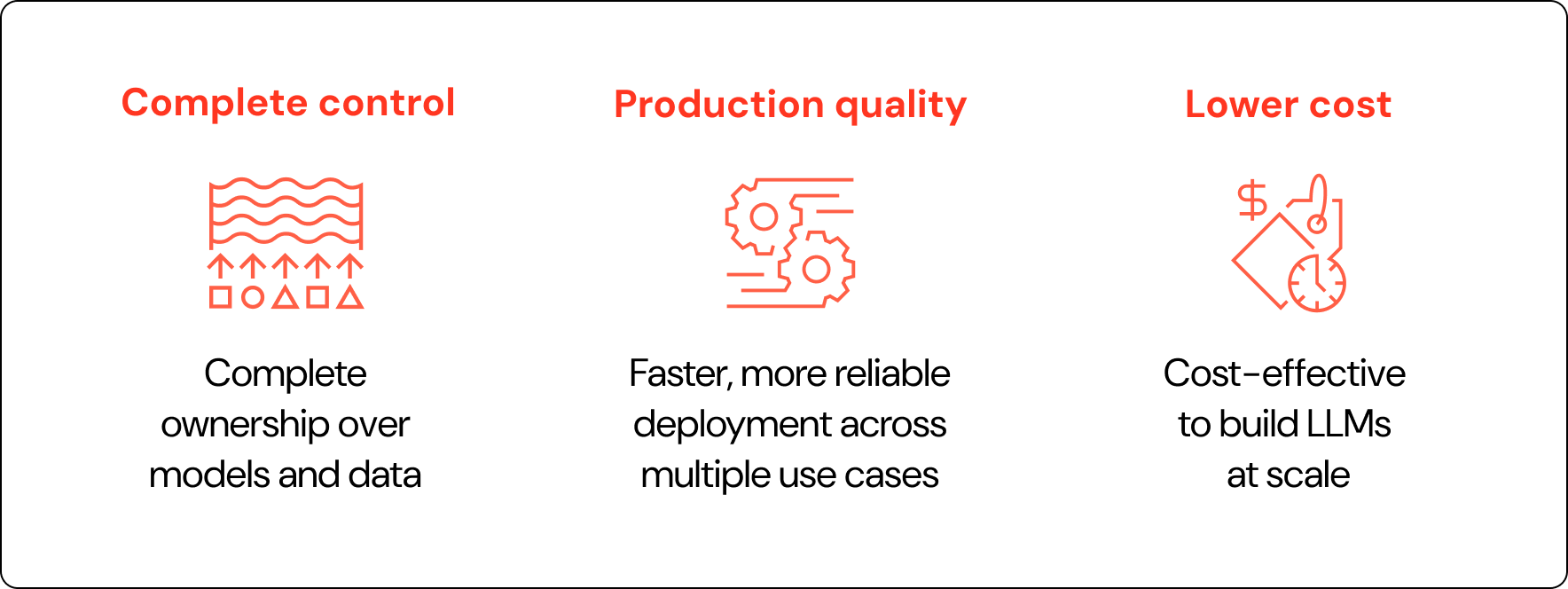

Data Intelligence Platforms let you maintain industry leadership with differentiated applications built using GenAI tools. The benefits of using a Data Intelligence Platform include:

- Full Control: Data Intelligence Platforms enable your organization to use its own unique enterprise data to build RAG or custom GenAI solutions. Your organization has full ownership over both the models and the data. You also have security and access controls, ensuring that users who shouldn’t have access to data won’t get it.

- Production Ready: Data Intelligence Platforms can serve models at massive scale, with governance, repeatability, and compliance built in.

- Cost Effective: Data Intelligence Platforms provide maximum efficiency for data streaming, allowing you to create or fine-tune LLMs custom-tailored to your domain, as well as leverage the most performant and cost-efficient LLM serving and training frameworks.

Thanks to Data Intelligence Platforms, your business can take advantage of the following outcomes:

- Intelligent Data Insights: your business decisions are enriched by using ALL of your data assets: structured, semi-structured, unstructured, and streaming. According to the MIT Technology Review report, up to 90% of a company’s data is untapped. The more varied the data used to train a model (think PDFs, Word docs, images, and social media), the more impactful the insights can be. Knowing what data is being accessed, and how frequently, reveals what is most valuable and what data remains untapped.

- Domain-specific customization: LLMs are built on your industry’s lingo and only on data you choose to ingest. This lets your LLM understand domain-specific terminology that third-party services won’t know. Even better: by using your own data, your IP is kept in-house.

- Simple governance, observability, and monitoring: By building or fine-tuning your own model, you’ll gain a better understanding of the outcomes. You’ll know how models were built, and on which versions of data. You’ll have a finger on the pulse of how your models are performing, whether incoming data is starting to drift, and whether models might need to be retrained to improve accuracy.
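To make “incoming data is starting to drift” concrete, here is a minimal sketch of one common drift measure, the Population Stability Index (PSI). It compares the distribution of a numeric feature in a baseline sample with a recent sample. This is plain Python, not a Databricks API. The function name, the bin count, and the often-quoted 0.2 alert threshold are illustrative assumptions.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """Compare two samples of a numeric feature with the Population Stability Index.

    Bin edges come from the baseline's range; a small epsilon keeps empty bins
    from producing log(0) or division by zero.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp values outside the baseline range into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        eps = 1e-6
        return [max(c / len(values), eps) for c in counts]

    p_base, p_curr = proportions(baseline), proportions(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(p_base, p_curr))


# Hypothetical threshold: PSI above ~0.2 is often read as significant drift.
DRIFT_THRESHOLD = 0.2

baseline = [float(x % 50) for x in range(1000)]
shifted = [float(x % 50) + 20 for x in range(1000)]
print(population_stability_index(baseline, baseline))  # prints 0.0
print(population_stability_index(baseline, shifted) > DRIFT_THRESHOLD)  # prints True
```

In practice, a check like this would run on a schedule for each monitored feature, with alerts raised above the chosen threshold.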

“You don’t necessarily want to build off an existing model where the data that you’re putting in could be used by that company to compete against your own core products.” – Michael Carbin, MIT Professor and Mosaic AI Founding Advisor

STAGES OF EVOLUTION

Ready to jump in? Let’s look at the typical profile of an organization at each stage of the AI maturity curve, when you should think about advancing to the next stage, and how Databricks’ Data Intelligence Platform can support you.

Pre-stage: Ingest, transform, and prepare data

The natural starting point for any AI journey is always going to be data. Companies often have vast amounts of data already collected, and new data keeps arriving at an immensely fast pace. Data can be a mix of all kinds, from structured transactional data collected in real time to scanned PDFs that came in via the web.

The Databricks Lakehouse processes your data workloads to reduce both operating costs and headaches. Central to this ecosystem is Unity Catalog, a foundational layer that governs all your data and AI assets, ensuring seamless integration and management of internal and external data sources, including Snowflake, MySQL, and more. This enhances the richness and diversity of your data ecosystem.

You can bring in near real-time streaming data through Delta Live Tables so you can act on events as soon as possible. ETL workflows can be set up to run on the right cadence, ensuring that healthy data flows through your pipelines from all sources, while also providing timely alerts as soon as anything is amiss. This comprehensive approach to data management will be crucial later, because the quality of your data, including external datasets, directly affects the performance of any AI built on top of it.
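Delta Live Tables expresses data quality rules as expectations, such as the `dlt.expect_or_drop` decorator, which drops records that fail a rule. The sketch below imitates that idea in plain Python so it runs anywhere. It is not the DLT API itself. The rule names, record fields, and alert threshold are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

Record = dict[str, Any]


@dataclass
class QualityReport:
    kept: list[Record] = field(default_factory=list)
    dropped: dict[str, int] = field(default_factory=dict)  # rule name -> drop count

    def drop_rate(self, total: int) -> float:
        return sum(self.dropped.values()) / total if total else 0.0


# Hypothetical rules: each is a named predicate that a valid record must satisfy.
EXPECTATIONS: dict[str, Callable[[Record], bool]] = {
    "has_order_id": lambda r: r.get("order_id") is not None,
    "positive_amount": lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] > 0,
}


def apply_expectations(records: list[Record]) -> QualityReport:
    """Keep records that pass every rule; count each drop under the first rule it fails."""
    report = QualityReport()
    for record in records:
        failed = next((name for name, rule in EXPECTATIONS.items() if not rule(record)), None)
        if failed is None:
            report.kept.append(record)
        else:
            report.dropped[failed] = report.dropped.get(failed, 0) + 1
    return report


ALERT_DROP_RATE = 0.1  # hypothetical: alert if more than 10% of a batch is dropped

batch = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": None, "amount": 5.0},
    {"order_id": 3, "amount": -2.0},
    {"order_id": 4, "amount": 12.5},
]
report = apply_expectations(batch)
if report.drop_rate(len(batch)) > ALERT_DROP_RATE:
    print(f"ALERT: dropped {report.dropped}")
```

Counting drops per rule, rather than just counting bad rows, makes the alert point to *which* check is failing upstream.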

Once you have your data confidently wrangled, it’s time to dip your toes into the world of Generative AI and see how you can create your first proof of concept.

Stage 1: Prompt Engineering

Many companies are still in the foundational stages of adopting Generative AI technology: they have no overarching AI strategy in place, no clear use cases to pursue, and no access to a team of data scientists and other professionals who can help guide the company’s AI adoption journey.

If this sounds like your business, a good starting point is an off-the-shelf LLM. While these LLMs lack the domain-specific expertise of custom AI models, experimentation can help you plot out your next steps. Your employees can craft specialized prompts and workflows to guide their usage. Your leaders can get a better understanding of the strengths and weaknesses of these tools, as well as a clearer vision of what early success in AI might look like. Your organization can start to figure out where to invest in more powerful AI tools and systems that drive more significant operational gains.

If you are ready to experiment with external models, Model Serving provides a unified platform to manage all models in one place and query them with a single API.
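A prompt-engineering POC usually starts with a reusable prompt template. The sketch below builds a chat-style request body that contains a system message and a user message. The OpenAI-style `messages` structure is an assumption about what a chat endpoint accepts. The template text, the function name, and the `max_tokens` value are hypothetical. Sending the request, through Model Serving or any other client, is left out so the example runs without credentials.

```python
import json

# Hypothetical template: a system instruction that frames every conversation.
SYSTEM_PROMPT = (
    "You are a helpful assistant for {company}. "
    "Answer concisely and say 'I don't know' when unsure."
)


def build_chat_request(company: str, question: str, max_tokens: int = 256) -> dict:
    """Return a chat-completion style request body (assumed OpenAI-compatible shape)."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT.format(company=company)},
            {"role": "user", "content": question},
        ],
        "max_tokens": max_tokens,
    }


request = build_chat_request(
    "Acme Corp", "Draft a friendly reply to a customer asking about shipping times."
)
print(json.dumps(request, indent=2))
```

Keeping the template separate from the question makes it easy to compare prompt variants side by side while experimenting.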

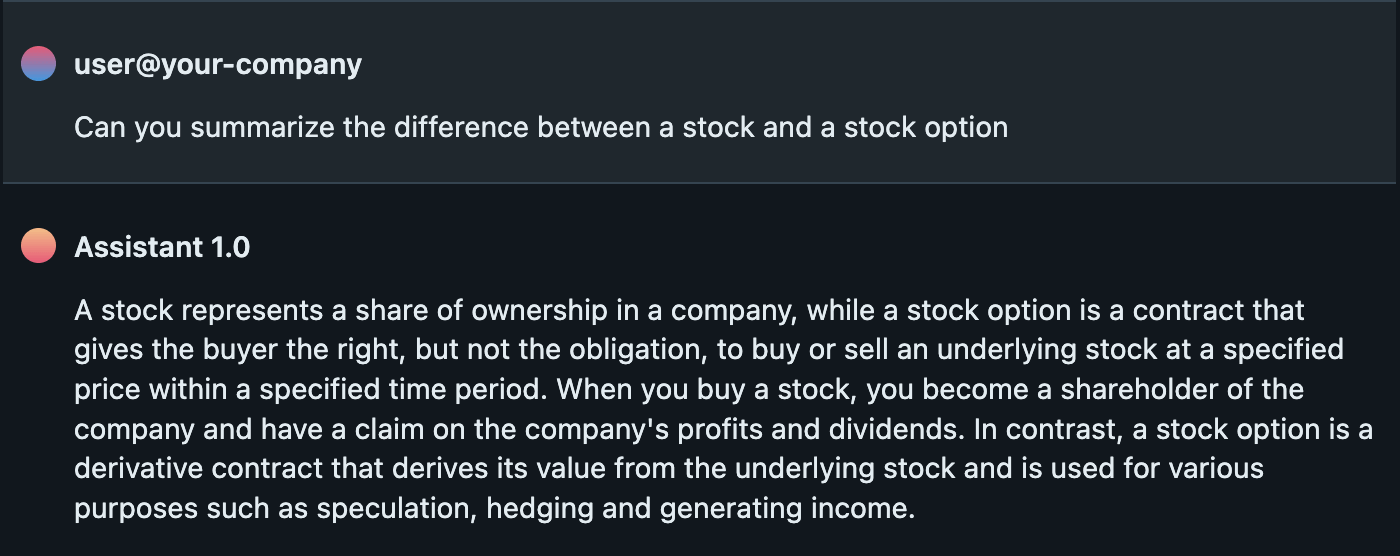

Below is an example prompt and response for a POC:

Stage 2: Retrieval Augmented Generation

Retrieval Augmented Generation (RAG) lets you bring in supplemental knowledge resources to make an off-the-shelf AI system smarter. RAG won’t change the underlying behavior of the model, but it will improve the relevance and accuracy of the responses.

However, at this point, your business shouldn’t be uploading its “mission-critical” data. Instead, the RAG process typically involves smaller amounts of non-sensitive information.

For example, plugging in an employee handbook can allow your staff to start asking the underlying model questions about the organization’s vacation policy. Uploading instruction manuals can help power a service chatbot. With the ability to query support tickets using AI, support agents can get answers quicker. However, inputting confidential financial data so employees can ask about the company’s performance is likely a step too far.

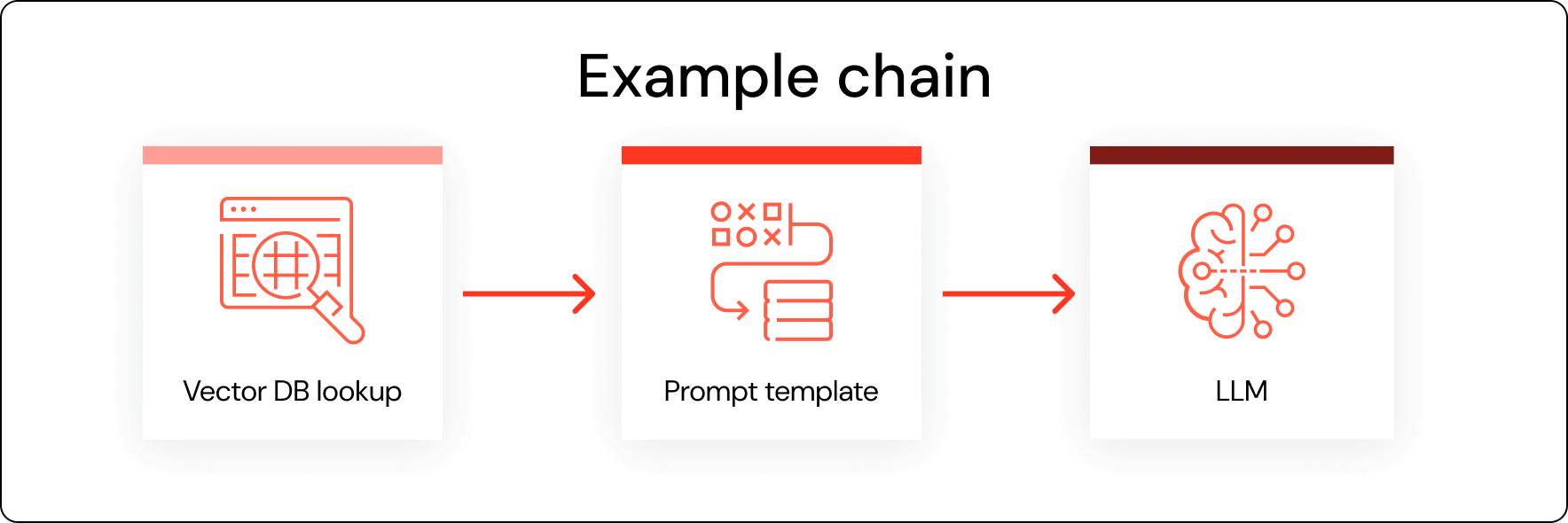

To get started, your team should first consolidate and cleanse the data you plan to use. With RAG, it’s vital that your company stores the data in sizes appropriate for the downstream models. Often, that means splitting it into smaller segments.
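Splitting documents into smaller segments is often called chunking. Here is a minimal sketch that makes word-based chunks with a fixed overlap, so context spanning a boundary is not lost from both neighbors. The chunk size and overlap values are illustrative, and production pipelines often count model tokens rather than words.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into word-based chunks of at most chunk_size words.

    Consecutive chunks share `overlap` words so context that spans a
    boundary appears in both neighbors.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start : start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


handbook = " ".join(f"w{i}" for i in range(10))
print(chunk_text(handbook, chunk_size=4, overlap=1))
# prints ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```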

Next, look for a tool like Databricks Vector Search, which enables users to quickly set up their own vector database. Because it’s governed by Unity Catalog, granular controls can be put in place to make sure employees only access the datasets for which they have credentials.
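Conceptually, a vector database stores an embedding for each chunk and returns the chunks closest to a query embedding. Governance then filters out anything the caller is not entitled to see. The toy in-memory index below illustrates both ideas with cosine similarity and a per-dataset permission check. It is not the Databricks Vector Search API. The class, the dataset names, and the tiny hand-written embeddings are hypothetical; a real system would use an embedding model.

```python
import math
from dataclasses import dataclass


@dataclass
class Entry:
    text: str
    dataset: str  # the governed dataset this chunk came from
    embedding: list[float]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyVectorIndex:
    def __init__(self, grants: dict[str, set[str]]):
        self.entries: list[Entry] = []
        self.grants = grants  # user -> datasets that user may read

    def add(self, entry: Entry) -> None:
        self.entries.append(entry)

    def search(self, user: str, query: list[float], k: int = 2) -> list[str]:
        """Return the k most similar chunks among datasets the user can access."""
        allowed = self.grants.get(user, set())
        visible = [e for e in self.entries if e.dataset in allowed]
        visible.sort(key=lambda e: cosine(e.embedding, query), reverse=True)
        return [e.text for e in visible[:k]]


index = ToyVectorIndex(grants={"alice": {"hr_handbook"}, "bob": {"hr_handbook", "finance"}})
index.add(Entry("Employees get 20 vacation days.", "hr_handbook", [1.0, 0.1, 0.0]))
index.add(Entry("Confidential quarterly revenue summary.", "finance", [0.9, 0.2, 0.0]))
index.add(Entry("Expense reports are due monthly.", "hr_handbook", [0.0, 1.0, 0.2]))

query = [1.0, 0.0, 0.0]  # hypothetical embedding of "How many vacation days?"
print(index.search("alice", query, k=1))  # prints ['Employees get 20 vacation days.']
```

The permission filter runs *before* ranking, so a restricted chunk can never appear in a user’s results, however similar it is to the query.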

Finally, you can plug that endpoint into a commercial LLM. A tool like Databricks MLflow helps centralize the management of those APIs.
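The last step is to pass the retrieved chunks to the LLM along with the user’s question. Here is a minimal sketch of assembling such an augmented prompt. It assumes the retrieval step has already returned a list of text chunks. The template wording and the function name are hypothetical, and the call to the LLM endpoint itself is omitted.

```python
def build_rag_prompt(question: str, chunks: list[str], max_chars: int = 2000) -> str:
    """Combine retrieved chunks and a question into one grounded prompt.

    Chunks are added in retrieval order until the character budget is used up,
    a rough stand-in for the model's context-window limit.
    """
    context_parts: list[str] = []
    used = 0
    for i, chunk in enumerate(chunks, start=1):
        part = f"[{i}] {chunk}"
        if used + len(part) > max_chars:
            break
        context_parts.append(part)
        used += len(part)
    context = "\n".join(context_parts)
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


prompt = build_rag_prompt(
    "How many vacation days do employees get?",
    ["Employees get 20 vacation days.", "Unused days roll over once."],
)
print(prompt)
```

Instructing the model to answer only from the supplied context is one common way RAG prompts try to reduce hallucinations. Numbering the chunks also lets responses cite their sources.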

The benefits of RAG include reduced hallucinations, more up-to-date and accurate responses, and better domain-specific intelligence. RAG-assisted models are also a more cost-effective approach for most organizations.

While RAG will help improve the results from commercial models, it still has many limitations. If your business can’t get the results it wants, it’s time to move on to heavier-weight solutions. However, moving beyond RAG-supported models often requires a much deeper commitment: the additional customization costs more and requires a lot more data.

That’s why it’s key that organizations first build a core understanding of how to use LLMs. By reaching the performance limits of off-the-shelf models before moving on, you and your leadership can better home in on where to allocate resources.

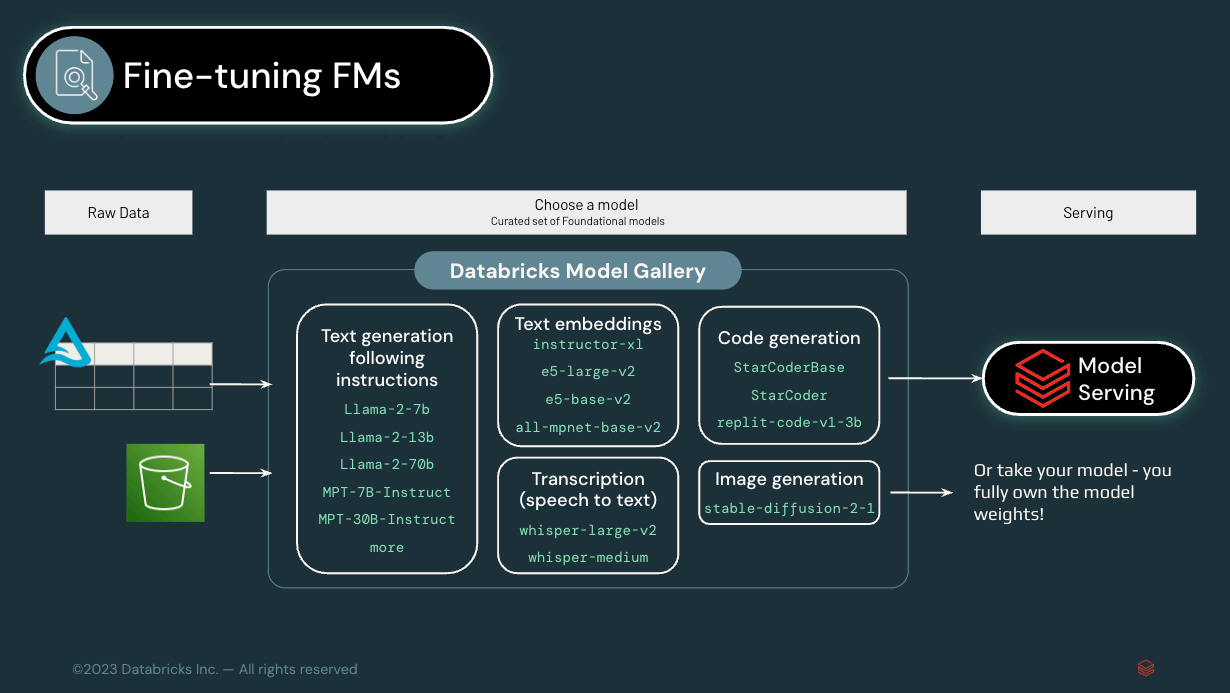

Stage 3: Fine-tuning a Foundation Model

Moving beyond RAG to model fine-tuning lets you start building models that are much more deeply personalized to the business. If you have already been experimenting with commercial models across your operations, you are likely ready to advance to this stage. At this point, executives clearly understand the value of Generative AI, as well as the limitations of publicly available LLMs. Specific use cases have been established. Now you and your enterprise are ready to go deeper.

With fine-tuning, you can take a general-purpose model and train it on your own specific data. For example, data management provider Stardog relies on the Mosaic AI tools from Databricks to fine-tune the off-the-shelf LLMs it uses as a foundation for its Knowledge Graph Platform. This enables Stardog’s customers to query their own data across different silos simply by using natural language.
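Fine-tuning data is commonly prepared as JSON Lines, with one training example per line. The sketch below converts question/answer pairs into chat-formatted records. The `messages` schema, the file location, and the example content are assumptions for illustration, so check the exact format your fine-tuning service expects before using it.

```python
import json
import tempfile
from pathlib import Path
from typing import Optional

# Hypothetical domain Q&A pairs curated from internal documentation.
EXAMPLES = [
    ("What does the SKU prefix HX mean?", "HX marks our heavy-duty industrial product line."),
    ("Who approves vendor contracts above the standard limit?", "The procurement committee."),
]


def to_chat_record(question: str, answer: str, system: Optional[str] = None) -> dict:
    """Turn one Q&A pair into a chat-style training record (assumed schema)."""
    messages = []
    if system:
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": question})
    messages.append({"role": "assistant", "content": answer})
    return {"messages": messages}


def write_jsonl(path: Path, records: list) -> None:
    """Write one JSON object per line, following the JSON Lines convention."""
    with path.open("w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


records = [to_chat_record(q, a) for q, a in EXAMPLES]
out = Path(tempfile.gettempdir()) / "train.jsonl"
write_jsonl(out, records)
print(out.read_text(encoding="utf-8").splitlines()[0])
```

Domain terms such as internal product codes are exactly the vocabulary an off-the-shelf model lacks, which is why they make good fine-tuning examples.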

It’s critical that organizations at this stage have an underlying architecture in place that helps ensure the data supporting the models is secure and accurate. Fine-tuning an AI system requires an immense amount of proprietary information. As your business advances on the AI maturity curve, the number of models running will only grow, increasing the demand for data access.

That’s why you need the right mechanisms in place to track data from the moment it’s generated to when it’s eventually used, and why Unity Catalog is such a popular feature among Databricks customers. With its data lineage capabilities, businesses always know where data is moving and who is accessing it.
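Lineage can be pictured as a directed graph that links each asset to the assets it was derived from. The toy sketch below walks that graph to answer “which sources feed this model?” and the reverse question. It only illustrates the idea, since Unity Catalog captures lineage automatically. The asset names are hypothetical.

```python
from collections import deque

# Hypothetical lineage edges: asset -> assets it was directly derived from.
LINEAGE: dict[str, list[str]] = {
    "models.support_bot_v3": ["gold.ticket_features"],
    "gold.ticket_features": ["silver.tickets", "silver.customers"],
    "silver.tickets": ["bronze.ticket_events"],
    "silver.customers": ["bronze.crm_export"],
}


def upstream_sources(asset: str) -> set[str]:
    """Breadth-first walk returning every asset the given one depends on."""
    seen: set[str] = set()
    queue = deque(LINEAGE.get(asset, []))
    while queue:
        parent = queue.popleft()
        if parent not in seen:
            seen.add(parent)
            queue.extend(LINEAGE.get(parent, []))
    return seen


def downstream_consumers(asset: str) -> set[str]:
    """Reverse lookup: every asset that (transitively) depends on the given one."""
    return {a for a in LINEAGE if asset in upstream_sources(a)}


print(sorted(upstream_sources("models.support_bot_v3")))
```

The downstream query is the impact-analysis direction. If a source table turns out to be corrupted, it lists every table and model that may need to be rebuilt.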

Stage 4: Pre-training a model from scratch

If you are at the stage where you are ready to pre-train a custom model, you’ve reached the apex of the AI maturity curve. Success here depends not just on having the right data in the right place, but also on access to the necessary expertise and infrastructure. Large model training requires a massive amount of compute and an understanding of the hardware and software complexities of a “hero run.” Beyond infrastructure and data governance considerations, make sure your use case and outcomes are clearly defined.

Don’t be afraid: while these tools may take investment and time to develop, they can have a transformative effect on your business. Custom models are heavy-duty systems that become the backbone of operations or power a new product offering. For example, software provider Replit relied on the Mosaic AI platform to build its own LLM to automate code generation.

These pre-trained models can perform significantly better than RAG-assisted or fine-tuned models. Stanford’s Center for Research on Foundation Models (working with Mosaic AI) built its own LLM specific to biomedicine. The custom model reached an accuracy of 74.4%, well above the 65.2% accuracy of the fine-tuned off-the-shelf model (a gain of 9.2 percentage points).

Post-stage: Operationalizing and LLMOps

Congratulations! You’ve successfully implemented fine-tuned or pre-trained models, and the final step is to productionize it all: a concept known as LLMOps (LLM Operations).

With LLMOps, contextual data is integrated nightly into vector databases, and AI models maintain high accuracy, with retraining triggered whenever performance drops. This stage also offers full transparency across departments, providing deep insight into AI model health and functionality.
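One way to act automatically when performance drops is a monitor that tracks a rolling quality metric over recent graded responses and flags the model when that metric falls below a threshold. Here is a minimal sketch. The window size, the threshold, and the idea of feeding it correct/incorrect judgments are all assumptions. Scheduling the actual retraining or re-indexing job is out of scope.

```python
from collections import deque


class RetrainMonitor:
    """Track rolling accuracy over the last `window` graded responses."""

    def __init__(self, window: int = 100, threshold: float = 0.8):
        self.scores: deque[int] = deque(maxlen=window)  # oldest scores fall off automatically
        self.threshold = threshold

    def record(self, correct: bool) -> None:
        self.scores.append(1 if correct else 0)

    @property
    def accuracy(self) -> float:
        return sum(self.scores) / len(self.scores) if self.scores else 1.0

    def needs_retraining(self) -> bool:
        # Only decide once the window is full, to avoid reacting to a handful of samples.
        return len(self.scores) == self.scores.maxlen and self.accuracy < self.threshold


monitor = RetrainMonitor(window=10, threshold=0.8)
for correct in [True] * 7 + [False] * 3:
    monitor.record(correct)
print(monitor.accuracy, monitor.needs_retraining())  # prints 0.7 True
```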

LLMOps (Large Language Model Operations) plays a crucial role throughout this journey, not just at the peak of AI sophistication, and it should be built in from the early stages. While GenAI customers may not initially engage in complex model pre-training, LLMOps principles are universally relevant and beneficial. Implementing LLMOps at every stage ensures a strong, scalable, and efficient AI operational framework, bringing the benefits of advanced AI to any organization, whatever its level of AI maturity.

What does a successful LLMOps architecture look like?

The Databricks Data Intelligence Platform serves as the foundation on which to build your LLMOps processes. It helps you easily manage, govern, evaluate, and monitor models and data. Here are some of the benefits it provides:

- Unified Governance: Unity Catalog allows for unified governance and security policies across data and models, streamlining MLOps management and enabling flexible, level-specific management in a single solution.

- Read Access to Production Assets: Data scientists get read-only access to production data and AI assets through Unity Catalog, facilitating model training, debugging, and comparison, and thus improving development speed and quality.

- Model Deployment: Using model aliases in Unity Catalog enables targeted deployment and workload management, optimizing model versioning and the handling of production traffic.

- Lineage: Unity Catalog’s robust lineage tracking links model versions to their training data and downstream consumers, offering comprehensive impact analysis and detailed tracking via MLflow.

- Discoverability: Centralizing data and AI assets in Unity Catalog makes them easier to discover, helping teams locate and use resources efficiently in their MLOps solutions.
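Model aliases let serving code refer to a stable name such as “champion” instead of a hard-coded version number, so promoting or rolling back a model becomes a metadata change. In MLflow, an alias is set with `MlflowClient().set_registered_model_alias(name, alias, version)`, and a model can be loaded through a `models:/<name>@<alias>` URI. The toy registry below mimics that behavior in plain Python so it runs without a workspace. The class, the model name, and the alias names are hypothetical.

```python
class ToyModelRegistry:
    """Minimal registry mapping (model name, alias) to a version number."""

    def __init__(self) -> None:
        self.versions: dict[str, list[int]] = {}
        self.aliases: dict[tuple[str, str], int] = {}

    def register(self, name: str) -> int:
        """Add a new version of the model and return its number (1, 2, ...)."""
        versions = self.versions.setdefault(name, [])
        versions.append(len(versions) + 1)
        return versions[-1]

    def set_alias(self, name: str, alias: str, version: int) -> None:
        if version not in self.versions.get(name, []):
            raise ValueError(f"{name} has no version {version}")
        self.aliases[(name, alias)] = version  # re-pointing an alias is a single update

    def resolve(self, name: str, alias: str) -> int:
        return self.aliases[(name, alias)]


registry = ToyModelRegistry()
v1 = registry.register("support_bot")
v2 = registry.register("support_bot")
registry.set_alias("support_bot", "champion", v1)
registry.set_alias("support_bot", "challenger", v2)

# Serving code asks for an alias, never a version number.
print(registry.resolve("support_bot", "champion"))  # prints 1

# Promote the challenger: production traffic now resolves to version 2.
registry.set_alias("support_bot", "champion", v2)
print(registry.resolve("support_bot", "champion"))  # prints 2
```

Rolling back is the same one-line update pointed at the earlier version, which is what makes alias-based deployment attractive for managing production traffic.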

For a glimpse of the kind of architecture that can make this a reality, we’ve collected many of our thoughts and experiences in our Big Book of MLOps, which includes a significant section on LLMs and covers everything we’ve discussed here. If you want to reach this state of AI nirvana, we highly recommend taking a look.

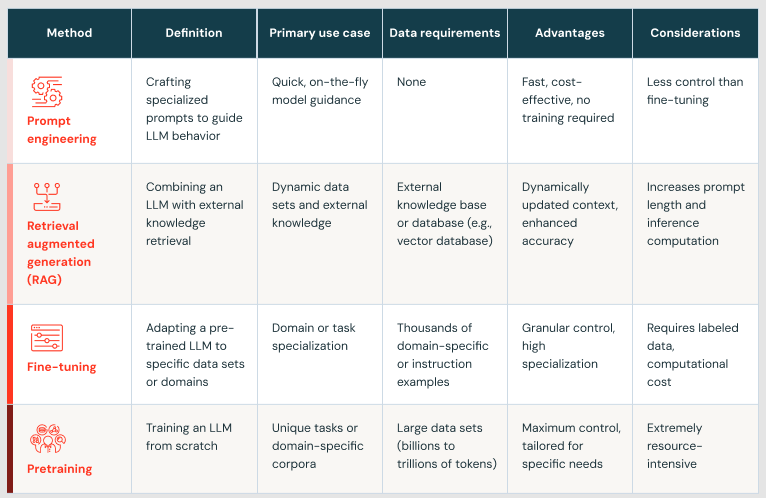

In this blog, we covered the stages of maturity that companies go through when implementing GenAI applications. The table below gives details:

Conclusion

Now that we’ve taken a journey along the Generative AI maturity curve and examined the techniques needed to make LLMs useful to your organization, let’s return to where it all begins: a Data Intelligence Platform.

A powerful Data Intelligence Platform, such as Databricks, provides a backbone for customized AI-powered applications. It offers a data layer that is both extremely performant at scale and secure and governed, to make sure only the right data gets used. Building on top of that data, a true Data Intelligence Platform can also understand semantics. This makes AI assistants much more powerful, because the models have access to your company’s unique data structures and terms.

Once your AI use cases are built and put into production, you’ll also need a platform that offers exceptional observability and monitoring to make sure everything is performing optimally. This is where a true Data Intelligence Platform shines, because it can learn what your “normal” data profiles look like and detect when issues arise.

Ultimately, the most important goal of a Data Intelligence Platform is to bridge the gap between complex AI models and the varied needs of users. This makes it possible for a wider range of individuals and organizations to leverage the power of LLMs (and Generative AI) to solve challenging problems using their own data.

The Databricks Data Intelligence Platform is the only end-to-end platform that can support enterprises from data ingestion and storage through AI model customization, and ultimately serve GenAI-powered applications.