For roboticists, one challenge towers above all others: generalization, the ability to create machines that can adapt to any environment or condition. Since the 1970s, the field has evolved from writing sophisticated programs to using deep learning, teaching robots to learn directly from human behavior. But a critical bottleneck remains: data quality. To improve, robots need to encounter scenarios that push the boundaries of their capabilities, operating at the edge of their mastery. This process traditionally requires human oversight, with operators carefully challenging robots to expand their abilities. As robots become more sophisticated, this hands-on approach hits a scaling problem: the demand for high-quality training data far outpaces humans’ ability to provide it.

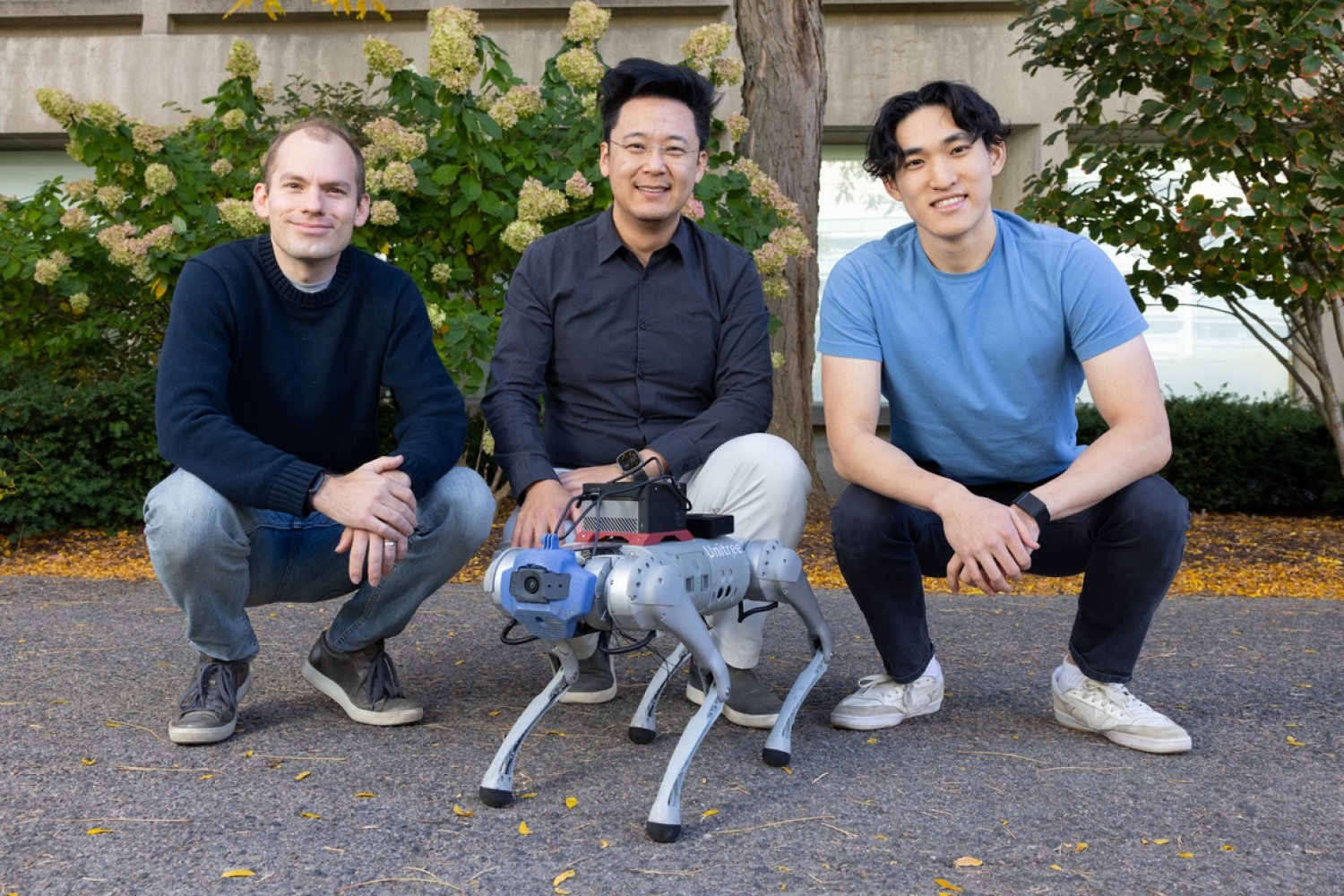

Now, a team of MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) researchers has developed a novel approach to robot training that could significantly accelerate the deployment of adaptable, intelligent machines in real-world environments. The new system, called “LucidSim,” uses recent advances in generative AI and physics simulators to create diverse and realistic virtual training environments, helping robots achieve expert-level performance in difficult tasks without any real-world data.

LucidSim combines physics simulation with generative AI models, addressing one of the most persistent challenges in robotics: transferring skills learned in simulation to the real world. “A fundamental challenge in robot learning has long been the ‘sim-to-real gap,’ the disparity between simulated training environments and the complex, unpredictable real world,” says MIT CSAIL postdoc Ge Yang, a lead researcher on LucidSim. “Previous approaches often relied on depth sensors, which simplified the problem but missed crucial real-world complexities.”

The multipronged system is a blend of different technologies. At its core, LucidSim uses large language models to generate varied, structured descriptions of environments. These descriptions are then transformed into images using generative models. To ensure that these images reflect real-world physics, an underlying physics simulator is used to guide the generation process.

The birth of an idea: From burritos to breakthroughs

The inspiration for LucidSim came from an unexpected place: a conversation outside Beantown Taqueria in Cambridge, Massachusetts. “We wanted to teach vision-equipped robots how to improve using human feedback. But then, we realized we didn’t have a pure vision-based policy to begin with,” says Alan Yu, an undergraduate student in electrical engineering and computer science (EECS) at MIT and co-lead author on LucidSim. “We kept talking about it as we walked down the street, and then we stopped outside the taqueria for about half an hour. That’s where we had our moment.”

To cook up their data, the team generated realistic images by extracting depth maps, which provide geometric information, and semantic masks, which label different parts of an image, from the simulated scene. They quickly realized, however, that with tight control over the composition of the image content, the model would produce nearly identical images from the same prompt. So, they devised a way to source diverse text prompts from ChatGPT.

This approach, however, only resulted in a single image. To make short, coherent videos that serve as little “experiences” for the robot, the scientists hacked together some image magic into another novel technique the team created, called “Dreams In Motion.” The system computes the movement of each pixel between frames to warp a single generated image into a short, multi-frame video. Dreams In Motion does this by considering the 3D geometry of the scene and the relative changes in the robot’s perspective.
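The warping step described above can be illustrated with a minimal sketch. It assumes a standard pinhole camera model, a per-pixel depth map, and a known rotation and translation between the two viewpoints; the article does not give the team's actual formulation, and the function name `pixel_flow` and its parameters are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Flow = Optional[Tuple[float, float]]


def pixel_flow(
    depth: Sequence[Sequence[float]],
    fx: float, fy: float, cx: float, cy: float,
    rotation: Sequence[Sequence[float]],
    translation: Sequence[float],
) -> List[List[Flow]]:
    """Per-pixel (du, dv) displacement between two camera poses.

    Assumption (not from the article): points seen by the first camera are
    mapped into the second camera's frame by p' = R p + t.
    """
    flow: List[List[Flow]] = []
    for v, row in enumerate(depth):
        flow_row: List[Flow] = []
        for u, z in enumerate(row):
            # Back-project the pixel to a 3D point in the first camera frame.
            p = ((u - cx) / fx * z, (v - cy) / fy * z, z)
            # Express that point in the second camera frame.
            q = [
                sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
                for i in range(3)
            ]
            if q[2] <= 0:
                # The point falls behind the new camera; no valid projection.
                flow_row.append(None)
                continue
            # Re-project and record how far the pixel moved.
            u2 = fx * q[0] / q[2] + cx
            v2 = fy * q[1] / q[2] + cy
            flow_row.append((u2 - u, v2 - v))
        flow.append(flow_row)
    return flow
```

With an identity rotation, a translation of (-0.1, 0, 0), a uniform depth of 2.0, and `fx = 100`, every pixel shifts 5 pixels to the left. A flow field like this could then be used to resample the single generated image into each subsequent frame.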

“We outperform domain randomization, a method developed in 2017 that applies random colors and patterns to objects in the environment, which is still considered the go-to method these days,” says Yu. “While this technique generates diverse data, it lacks realism. LucidSim addresses both diversity and realism problems. It’s exciting that even without seeing the real world during training, the robot can recognize and navigate obstacles in real environments.”
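For contrast, the random-color baseline from the quote above (commonly known as domain randomization) can be sketched in a few lines. This is only an illustration under simple assumptions: the scene is given as a grid of integer semantic labels, and each label is painted with one randomly drawn RGB color. The function name `randomize_colors` is hypothetical, and real implementations also randomize textures, lighting, and more.

```python
import random
from typing import Dict, List, Tuple

Color = Tuple[int, int, int]


def randomize_colors(mask: List[List[int]], rng: random.Random) -> List[List[Color]]:
    """Paint each semantic label in `mask` with one randomly drawn RGB color."""
    palette: Dict[int, Color] = {}
    image: List[List[Color]] = []
    for row in mask:
        out_row: List[Color] = []
        for label in row:
            # Draw a color the first time a label is seen, then reuse it so
            # every pixel of the same object shares that color.
            if label not in palette:
                palette[label] = (
                    rng.randrange(256),
                    rng.randrange(256),
                    rng.randrange(256),
                )
            out_row.append(palette[label])
        image.append(out_row)
    return image
```

Calling this repeatedly with differently seeded `random.Random` instances yields many colorings of the same geometry, which gives variety but, as the quote notes, not realism.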

The team is particularly excited about the potential of applying LucidSim to domains outside quadruped locomotion and parkour, their main test bed. One example is mobile manipulation, where a mobile robot is tasked with handling objects in an open area and where color perception is also critical. “Today, these robots still learn from real-world demonstrations,” says Yang. “Although collecting demonstrations is easy, scaling a real-world robot teleoperation setup to thousands of skills is challenging because a human has to physically set up each scene. We hope to make this easier, thus qualitatively more scalable, by moving data collection into a virtual environment.”

Who’s the real expert?

The team put LucidSim to the test against an alternative, in which an expert teacher demonstrates the skill for the robot to learn from. The results were surprising: Robots trained by the expert struggled, succeeding only 15 percent of the time, and even quadrupling the amount of expert training data barely moved the needle. But when robots collected their own training data through LucidSim, the story changed dramatically. Just doubling the dataset size catapulted success rates to 88 percent. “And giving our robot more data monotonically improves its performance; eventually, the student becomes the expert,” says Yang.

“One of the main challenges in sim-to-real transfer for robotics is achieving visual realism in simulated environments,” says Stanford University assistant professor of electrical engineering Shuran Song, who wasn’t involved in the research. “The LucidSim framework provides an elegant solution by using generative models to create diverse, highly realistic visual data for any simulation. This work could significantly accelerate the deployment of robots trained in virtual environments to real-world tasks.”

From the streets of Cambridge to the cutting edge of robotics research, LucidSim is paving the way toward a new generation of intelligent, adaptable machines that learn to navigate our complex world without ever setting foot in it.

Yu and Yang wrote the paper with four fellow CSAIL affiliates: Ran Choi, an MIT postdoc in mechanical engineering; Yajvan Ravan, an MIT undergraduate in EECS; John Leonard, the Samuel C. Collins Professor of Mechanical and Ocean Engineering in the MIT Department of Mechanical Engineering; and Phillip Isola, an MIT associate professor in EECS. Their work was supported, in part, by a Packard Fellowship, a Sloan Research Fellowship, the Office of Naval Research, Singapore’s Defence Science and Technology Agency, Amazon, MIT Lincoln Laboratory, and the National Science Foundation Institute for Artificial Intelligence and Fundamental Interactions. The researchers presented their work at the Conference on Robot Learning (CoRL) in early November.