Today, I’m excited to share a few product updates we’ve been working on related to real-time Change Data Capture (CDC), including early access for popular templates and third-party CDC platforms. In this post we’ll highlight the new functionality, walk through some examples to help data teams get started, and explain why real-time CDC just became much more accessible.

What Is CDC And Why Is It Useful?

First, a quick overview of what CDC is and why we’re such big fans. Because all databases make technical tradeoffs, it’s common to move data from a source to a destination based on how the data will be used. Broadly speaking, there are three basic ways to move data from point A to point B:

- A periodic full dump, i.e. copying all data from source A to destination B, completely replacing the previous dump each time.

- Periodic batch updates, i.e. every 15 minutes, run a query on A to see which records have changed since the last run (maybe using a modified flag, an updated time, etc.), and batch insert those into your destination.

- Incremental updates (aka CDC): as records change in A, emit a stream of changes that can be applied efficiently downstream in B.
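To make the difference between periodic batch updates and incremental updates concrete, here is a minimal Python sketch that is not tied to any database or CDC tool. The in-memory "tables", the `updated_at` logical clock, and the `(op, key, row)` event shape are all hypothetical. It also surfaces one property worth knowing: a poller that filters on an update timestamp never sees a hard-deleted row, because the row is no longer there to query.

```python
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]


def batch_poll(source: Dict[str, Row], dest: Dict[str, Row], last_run: int) -> int:
    """Periodic batch update: copy every row whose updated_at is newer than
    last_run, and return the new high-water mark for the next run."""
    high_water = last_run
    for key, row in source.items():
        if row["updated_at"] > last_run:
            dest[key] = dict(row)
            high_water = max(high_water, row["updated_at"])
    return high_water


def apply_cdc(events: List[Tuple[str, str, Row]], dest: Dict[str, Row]) -> None:
    """Incremental updates (CDC): apply (op, key, row) change events in order."""
    for op, key, row in events:
        if op == "delete":
            dest.pop(key, None)
        else:  # "insert" or "update"
            dest[key] = dict(row)


# Hypothetical source table; updated_at is a logical clock, not wall time.
source: Dict[str, Row] = {
    "1": {"name": "Ari", "updated_at": 10},
    "2": {"name": "Bo", "updated_at": 11},
}
batch_dest: Dict[str, Row] = {}
cdc_dest: Dict[str, Row] = {}

mark = batch_poll(source, batch_dest, last_run=0)
apply_cdc([("insert", "1", source["1"]), ("insert", "2", source["2"])], cdc_dest)

# At the source, row 1 is updated and row 2 is hard-deleted.
source["1"] = {"name": "Ari B.", "updated_at": 20}
del source["2"]

mark = batch_poll(source, batch_dest, last_run=mark)
apply_cdc([("update", "1", source["1"]), ("delete", "2", {})], cdc_dest)

print(sorted(batch_dest))  # ['1', '2']: the stale copy of row 2 survives
print(sorted(cdc_dest))    # ['1']
```

The change stream carries the delete explicitly, so the CDC destination stays faithful to the source, while the poller picks up the update but silently keeps the deleted row.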

CDC leverages streaming to track and transport changes from one system to another. This method offers a few enormous advantages over batch updates. First, CDC theoretically allows companies to analyze and react to data in real time, as it’s generated. Second, it works with existing streaming systems like Apache Kafka, Amazon Kinesis, and Azure Event Hubs, making it easier than ever to build a real-time data pipeline.

A Common Antipattern: Real-Time CDC on a Cloud Data Warehouse

One of the more common patterns for CDC is moving data from a transactional or operational database into a cloud data warehouse (CDW). This method has a handful of drawbacks.

First, most CDWs don’t support in-place updates, which means that as new data arrives they have to allocate and write an entirely new copy of each affected micropartition via the MERGE command, which also captures inserts and deletes. The upshot? Using a CDW as a CDC destination is either more expensive (large, frequent writes) or slow (less frequent writes). Data warehouses were built for batch jobs, so we shouldn’t be surprised by this. But then what are users to do when real-time use cases arise? Madison Schott at Airbyte writes, “I had a need for semi real-time data within Snowflake. After increasing data syncs in Airbyte to once every 15 minutes, Snowflake costs skyrocketed. Because data was being ingested every 15 minutes, the data warehouse was almost always running.” If your costs explode with a sync frequency of 15 minutes, you simply can’t respond to recent data, let alone real-time data.
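A back-of-the-envelope Python sketch shows why rewriting whole micropartitions gets expensive. It models no real warehouse. The assumptions, all hypothetical, are: the table is stored as fixed-size immutable micropartitions, any partition containing at least one changed row is rewritten in full, and changed rows land in uniformly random partitions. That last assumption is pessimistic, since clustered changes touch fewer partitions.

```python
def expected_partitions_touched(num_partitions: int, changed_rows: int) -> float:
    """Expected number of distinct partitions hit when each changed row falls
    into a uniformly random partition (the classic balls-into-bins result)."""
    p = num_partitions
    return p * (1.0 - (1.0 - 1.0 / p) ** changed_rows)


def write_amplification(num_partitions: int, partition_bytes: int,
                        changed_rows: int, row_bytes: int) -> float:
    """Bytes rewritten by one MERGE (every touched partition is rewritten in
    full) divided by the bytes that actually changed."""
    rewritten = expected_partitions_touched(num_partitions, changed_rows) * partition_bytes
    changed = changed_rows * row_bytes
    return rewritten / changed


# Hypothetical table: 10,000 micropartitions of 16 MiB each, 1 KiB rows.
PARTITIONS = 10_000
PARTITION_BYTES = 16 * 1024 * 1024
ROW_BYTES = 1024

for rows_per_sync in (100, 1_500, 50_000):
    amp = write_amplification(PARTITIONS, PARTITION_BYTES, rows_per_sync, ROW_BYTES)
    print(f"{rows_per_sync:>6} changed rows per MERGE -> ~{amp:,.0f}x write amplification")
```

Under these made-up numbers, small frequent MERGEs rewrite roughly 16,000 bytes for every byte that changed. Larger, less frequent MERGEs amortize better, but at the cost of freshness, which is the cost-versus-latency tradeoff described above.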

Time and time again, companies in a wide variety of industries have boosted revenue, increased productivity and cut costs by making the leap from batch analytics to real-time analytics. Dimona, a leading Latin American apparel company founded 55 years ago in Brazil, had this to say about their inventory management database: “As we brought more warehouses and stores online, the database started bogging down on the analytics side. Queries that used to take tens of seconds started taking more than a minute or timing out altogether…. Using Amazon’s Database Migration Service (DMS), we now continuously replicate data from Aurora into Rockset, which does all of the data processing, aggregations and calculations.” Real-time databases aren’t just optimized for real-time CDC; they make it possible and efficient for organizations of any size. Unlike cloud data warehouses, Rockset is purpose-built to ingest large amounts of data in seconds and to execute sub-second queries against that data.

CDC For Real-Time Analytics

At Rockset, we’ve seen CDC adoption skyrocket. Teams often have pipelines that generate CDC deltas and need a system that can handle the real-time ingestion of those deltas to enable workloads with low end-to-end latency and high query scalability. Rockset was designed for this exact use case. We’ve already built CDC-based data connectors for many common sources: DynamoDB, MongoDB, and more. With the new CDC support we’re launching today, Rockset seamlessly enables real-time CDC coming from dozens of popular sources across several industry-standard CDC formats.

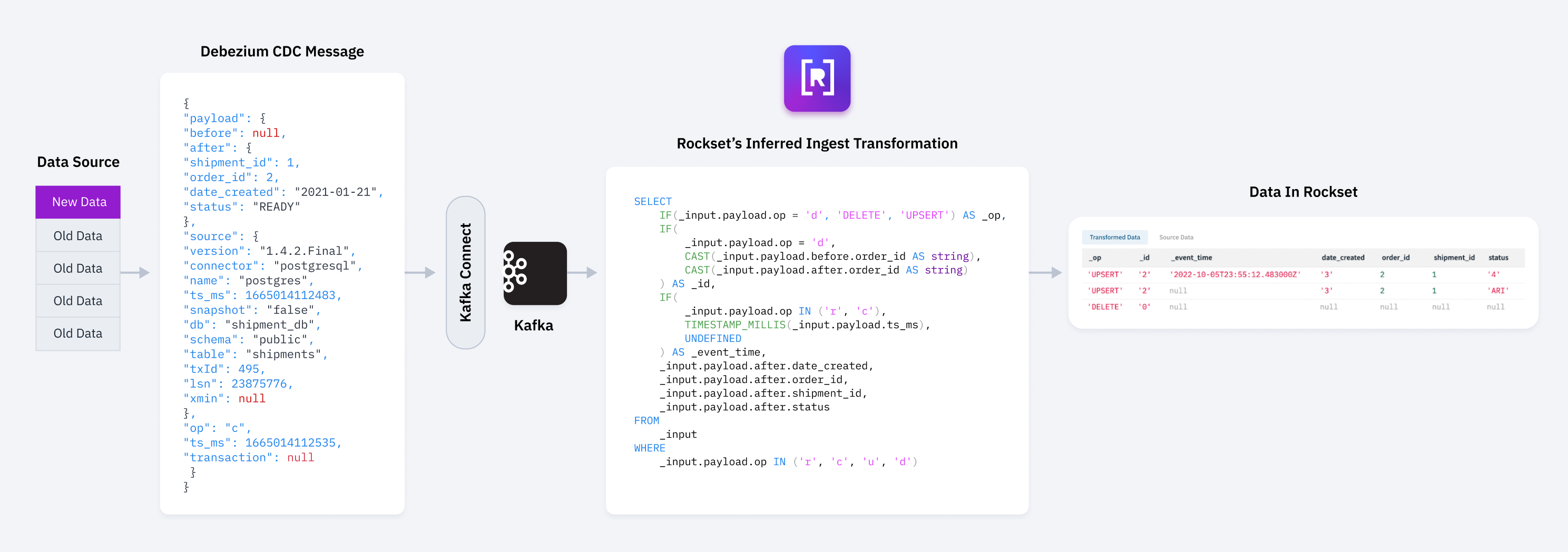

For some background, when you ingest data into Rockset you can specify a SQL query, called an ingest transformation, that is evaluated on your source data. The result of that query is what’s persisted to your underlying collection (the equivalent of a SQL table). This gives you the power of SQL to accomplish everything from renaming, dropping, or combining fields to filtering out rows based on complex conditions. You can even perform write-time aggregations (rollups) and configure advanced features like data clustering on your collection.
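Rollups are the least obvious item in that list. The idea is to aggregate at write time and persist only the aggregates, not the raw events. Below is a tiny in-memory Python sketch of that idea. It is not Rockset’s implementation, and the event fields (`store`, `ts` in epoch seconds, `amount`) are hypothetical. In an ingest transformation, the same effect would come from a GROUP BY plus a WHERE filter.

```python
from collections import defaultdict
from datetime import datetime, timezone
from typing import Dict, Iterable, Tuple


def rollup(events: Iterable[dict]) -> Dict[Tuple[str, str], dict]:
    """Aggregate raw events into one row per (store, hour) at write time,
    so only the aggregates would be persisted."""
    out: Dict[Tuple[str, str], dict] = defaultdict(lambda: {"count": 0, "total": 0.0})
    for e in events:
        # Drop rows without an amount, as a WHERE clause would.
        if e.get("amount") is None:
            continue
        hour = datetime.fromtimestamp(e["ts"], tz=timezone.utc).strftime("%Y-%m-%dT%H:00")
        agg = out[(e["store"], hour)]
        agg["count"] += 1
        agg["total"] += e["amount"]
    return dict(out)


events = [
    {"store": "sp-01", "ts": 0, "amount": 10.0},
    {"store": "sp-01", "ts": 1800, "amount": 5.5},
    {"store": "sp-01", "ts": 3600, "amount": 2.0},
    {"store": "rj-02", "ts": 60, "amount": None},
]
for key, agg in sorted(rollup(events).items()):
    print(key, agg)
```

Four raw events collapse into two stored rows. That reduction in stored and indexed data is the main reason to roll up at ingest when only aggregates are ever queried.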

CDC data often arrives as deeply nested objects with complex schemas and lots of data that the destination doesn’t need. With an ingest transformation, you can easily restructure the incoming documents, clean up names, and map source fields to Rockset’s special fields. This all happens seamlessly as part of Rockset’s managed, real-time ingestion platform. In contrast, other systems require complex, intermediate ETL jobs or pipelines to achieve similar data manipulation, which adds operational complexity, data latency, and cost.
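Conceptually, restructuring a nested CDC record comes down to selecting a few deep paths and giving them clean names. The Python sketch below does this with a flatten-then-rename step. The record shape and the rename map are hypothetical, and the code only mimics what a projection such as `SELECT _input.payload.after.CUST_ID AS customer_id ...` would produce. It is not how Rockset evaluates transformations.

```python
from typing import Any, Dict


def flatten(doc: Dict[str, Any], prefix: str = "") -> Dict[str, Any]:
    """Flatten nested dicts into dotted paths, e.g. {"a": {"b": 1}} -> {"a.b": 1}."""
    flat: Dict[str, Any] = {}
    for key, value in doc.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


def reshape(doc: Dict[str, Any], rename: Dict[str, str]) -> Dict[str, Any]:
    """Keep only the paths listed in `rename`, under their new names;
    everything else (e.g. log positions) is dropped."""
    flat = flatten(doc)
    return {new: flat[old] for old, new in rename.items() if old in flat}


# Hypothetical nested CDC record and field mapping.
record = {
    "payload": {
        "after": {"CUST_ID": 42, "CUST_NAME": "Ari"},
        "source": {"db": "shop", "table": "customers", "lsn": 123},
    }
}
rename = {
    "payload.after.CUST_ID": "customer_id",
    "payload.after.CUST_NAME": "name",
    "payload.source.table": "table",
}
print(reshape(record, rename))  # {'customer_id': 42, 'name': 'Ari', 'table': 'customers'}
```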

You can ingest CDC data from virtually any source using the power and flexibility of Rockset’s ingest transformations. To do so, there are a few special fields you need to populate.

_id

This is a document’s unique identifier in Rockset. It’s important that the primary key from your source is properly mapped to _id so that updates and deletes for each document are applied correctly. For example:

-- simple single-field mapping when `field` is already a string
SELECT field AS _id;

-- single field with casting required since `field` is not a string
SELECT CAST(field AS string) AS _id;

-- compound primary key from the source mapped to _id using the SQL function ID_HASH
SELECT ID_HASH(field1, field2) AS _id;
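Whatever function builds the _id from a compound key, it has to be deterministic, and it has to be unambiguous about where one key part ends and the next begins. The Python sketch below illustrates both properties. It is explicitly not Rockset’s ID_HASH algorithm, and `compound_id` is a hypothetical helper. It JSON-encodes the key parts before hashing so that ("ab", "c") and ("a", "bc") cannot collide the way naive string concatenation would.

```python
import hashlib
import json
from typing import Any


def compound_id(*key_parts: Any) -> str:
    """Hypothetical helper: map a compound primary key to one string id.

    JSON-encoding the parts as a list keeps the boundaries (and the types)
    explicit, so ("ab", "c") differs from ("a", "bc") and 1 differs from "1".
    """
    encoded = json.dumps(list(key_parts), separators=(",", ":"), sort_keys=True)
    return hashlib.sha256(encoded.encode("utf-8")).hexdigest()


print(compound_id("order-7", 3) == compound_id("order-7", 3))  # True
print(compound_id("ab", "c") == compound_id("a", "bc"))        # False
```

Determinism matters because the update or delete for a row must produce the same _id as the original insert. Otherwise it lands on a different document.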

_event_time

This is a document’s timestamp in Rockset. CDC deltas typically include timestamps from their source, and it’s useful to map those to Rockset’s special timestamp field. For example:

-- Map source field `ts_epoch`, which is milliseconds since the epoch, to a timestamp for _event_time
SELECT TIMESTAMP_MILLIS(ts_epoch) AS _event_time
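The unit of the source field is the detail that most often goes wrong here. The Python sketch below performs the same milliseconds-to-timestamp conversion so you can sanity-check sample values before writing the transformation. `timestamp_millis` is a hypothetical helper that mirrors the idea of TIMESTAMP_MILLIS, not its implementation. It assumes `ts_epoch` is an integer count of milliseconds in UTC.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def timestamp_millis(ts_epoch: int) -> datetime:
    """Convert integer milliseconds since the Unix epoch to an aware UTC datetime.

    Adding a timedelta avoids the float rounding of fromtimestamp(ms / 1000).
    """
    return EPOCH + timedelta(milliseconds=ts_epoch)


print(timestamp_millis(1481569981000).isoformat())  # 2016-12-12T19:13:01+00:00
print(timestamp_millis(1481569981).date())          # 1970-01-18: seconds passed as milliseconds
```

The second line shows the usual symptom of a unit mix-up: timestamps that cluster in January 1970.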

_op

This tells the ingestion platform how to interpret a new record. Most frequently, new documents are exactly that, new documents, and they are ingested into the underlying collection. However, with _op you can also use a document to encode a delete operation. For example:

{"_id": "123", "name": "Ari", "city": "San Mateo"} → insert a new document with id 123

{"_id": "123", "_op": "DELETE"} → delete the document with id 123

This flexibility allows users to map complex logic from their sources. For example:

SELECT field AS _id, IF(type = 'delete', 'DELETE', 'UPSERT') AS _op
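To see how a stream of such documents changes a collection over time, here is an in-memory Python sketch. `to_rockset_doc` mirrors the one-line SQL above and treats `field` and `type` as hypothetical source columns. `apply_ops` stands in for the collection. The semantics are assumptions made for this sketch only: DELETE removes the document, and any other value, including a missing _op, merges top-level fields into the existing document. Rockset’s docs describe the exact behavior of each _op value.

```python
from typing import Any, Dict, Iterable

Doc = Dict[str, Any]


def to_rockset_doc(record: Doc) -> Doc:
    """Mirror of: SELECT field AS _id, IF(type = 'delete', 'DELETE', 'UPSERT') AS _op.

    `field` and `type` are the hypothetical source columns from that example;
    the remaining columns are passed through, which the one-line SQL omits.
    """
    rest = {k: v for k, v in record.items() if k not in ("field", "type")}
    op = "DELETE" if record["type"] == "delete" else "UPSERT"
    return {"_id": record["field"], "_op": op, **rest}


def apply_ops(collection: Dict[str, Doc], docs: Iterable[Doc]) -> Dict[str, Doc]:
    """Apply documents to an in-memory stand-in for a collection, keyed by _id.

    Assumed semantics for this sketch only: "DELETE" removes the document;
    anything else (including a missing _op) merges top-level fields into the
    existing document, or inserts it if none exists.
    """
    for doc in docs:
        doc_id = doc["_id"]
        if doc.get("_op") == "DELETE":
            collection.pop(doc_id, None)
        else:
            fields = {k: v for k, v in doc.items() if k != "_op"}
            collection[doc_id] = {**collection.get(doc_id, {}), **fields}
    return collection


coll: Dict[str, Doc] = {}
apply_ops(coll, [{"_id": "123", "name": "Ari", "city": "San Mateo"}])
apply_ops(coll, [to_rockset_doc({"field": "123", "type": "update", "city": "Palo Alto"})])
print(coll)  # {'123': {'_id': '123', 'name': 'Ari', 'city': 'Palo Alto'}}
apply_ops(coll, [{"_id": "123", "_op": "DELETE"}])
print(coll)  # {}
```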

Check out our docs for more information.

Templates and Platforms

Understanding the concepts above makes it possible to bring CDC data into Rockset as-is. However, constructing the correct transformation on these deeply nested objects and correctly mapping all of the special fields can be error-prone and cumbersome. To address these challenges, we’ve added early-access, native support for a variety of ingest transformation templates. These help users more easily configure the correct transformations on top of CDC data.

Because templates are part of the ingest transformation, you get the power and flexibility of Rockset’s data ingestion platform to bring this CDC data from any of our supported sources, including event streams, directly through our write API, or even through data lakes like S3, GCS, and Azure Blob Storage. The full list of templates and platforms we’re announcing support for includes the following:

Template Support

- Debezium: An open source distributed platform for change data capture.

- AWS Database Migration Service: Amazon’s web service for data migration.

- Confluent Cloud (via Debezium): A cloud-native data streaming platform.

- Arcion: An enterprise CDC platform designed for scalability.

- Striim: A unified data integration and streaming platform.

Platform Support

- Airbyte: An open platform that unifies data pipelines.

- Estuary: A real-time data operations platform.

- Decodable: A serverless real-time data platform.

If you’d like to request early access to CDC template support, please email support@rockset.com.

For example, here is a templatized message that Rockset supports automatic configuration for:

{
  "data": {
    "ID": "1",
    "NAME": "User One"
  },
  "before": null,
  "metadata": {
    "TABLENAME": "Employee",
    "CommitTimestamp": "12-Dec-2016 19:13:01",
    "OperationName": "INSERT"
  }
}

And here is the inferred transformation:

SELECT
  IF(
    _input.metadata.OperationName = 'DELETE',
    'DELETE',
    'UPSERT'
  ) AS _op,
  CAST(_input.data.ID AS string) AS _id,
  IF(
    _input.metadata.OperationName = 'INSERT',
    PARSE_TIMESTAMP(
      '%d-%b-%Y %H:%M:%S',
      _input.metadata.CommitTimestamp
    ),
    UNDEFINED
  ) AS _event_time,
  _input.data.ID,
  _input.data.NAME
FROM
  _input
WHERE
  _input.metadata.OperationName IN ('INSERT', 'UPDATE', 'DELETE')
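To see what the inferred transformation does to the sample message, here is a plain-Python mirror of it. It is only a reading aid, not how Rockset evaluates ingest transformations. It assumes that PARSE_TIMESTAMP’s format specifiers behave like Python’s strptime directives for this particular format string, and it models UNDEFINED by leaving the key out of the result. `transform` is a hypothetical name.

```python
import json
from datetime import datetime
from typing import Any, Dict, Optional

Doc = Dict[str, Any]


def transform(message: Doc) -> Optional[Doc]:
    """Python mirror of the inferred ingest transformation above.

    Returns None for messages that the WHERE clause would filter out.
    Fields that would be UNDEFINED in SQL are simply left out of the result.
    """
    meta = message.get("metadata", {})
    op_name = meta.get("OperationName")
    if op_name not in ("INSERT", "UPDATE", "DELETE"):
        return None
    data = message.get("data") or {}
    doc: Doc = {
        "_op": "DELETE" if op_name == "DELETE" else "UPSERT",
        "_id": str(data["ID"]),  # CAST(_input.data.ID AS string)
    }
    if op_name == "INSERT":
        # PARSE_TIMESTAMP('%d-%b-%Y %H:%M:%S', CommitTimestamp), modeled with strptime
        doc["_event_time"] = datetime.strptime(meta["CommitTimestamp"], "%d-%b-%Y %H:%M:%S")
    for column in ("ID", "NAME"):
        if column in data:
            doc[column] = data[column]
    return doc


message = json.loads("""
{
  "data": {"ID": "1", "NAME": "User One"},
  "before": null,
  "metadata": {
    "TABLENAME": "Employee",
    "CommitTimestamp": "12-Dec-2016 19:13:01",
    "OperationName": "INSERT"
  }
}
""")
print(transform(message))
```

Note that only INSERT messages get an _event_time. Updates and deletes carry the same _id with an UPSERT or DELETE _op, so they act on the document created by the original insert.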

These technologies and products allow you to create highly secure, scalable, real-time data pipelines in just minutes. Each of these platforms has a built-in connector for Rockset, obviating many manual configuration requirements, such as those for:

- PostgreSQL

- MySQL

- IBM Db2

- Vitess

- Cassandra

From Batch To Real-Time

CDC has the potential to make real-time analytics possible. But if your team or application needs low-latency access to data, relying on systems that batch or microbatch data will explode your costs. Real-time use cases are hungry for compute, but the architectures of batch-based systems are optimized for storage. You now have a new, completely viable option. Change data capture tools like Airbyte, Striim, and Debezium, along with real-time analytics databases like Rockset, reflect an entirely new architecture and are finally able to deliver on the promise of real-time CDC. These tools are purpose-built for high-performance, low-latency analytics at scale. CDC is flexible, powerful, and standardized in a way that ensures support for data sources and destinations will continue to grow. Rockset and CDC are a perfect match, reducing the cost of real-time CDC so that organizations of any size can finally move past batch and toward real-time analytics.

If you’d like to give Rockset + CDC a try, you can start a free, two-week trial with $300 in credits here.