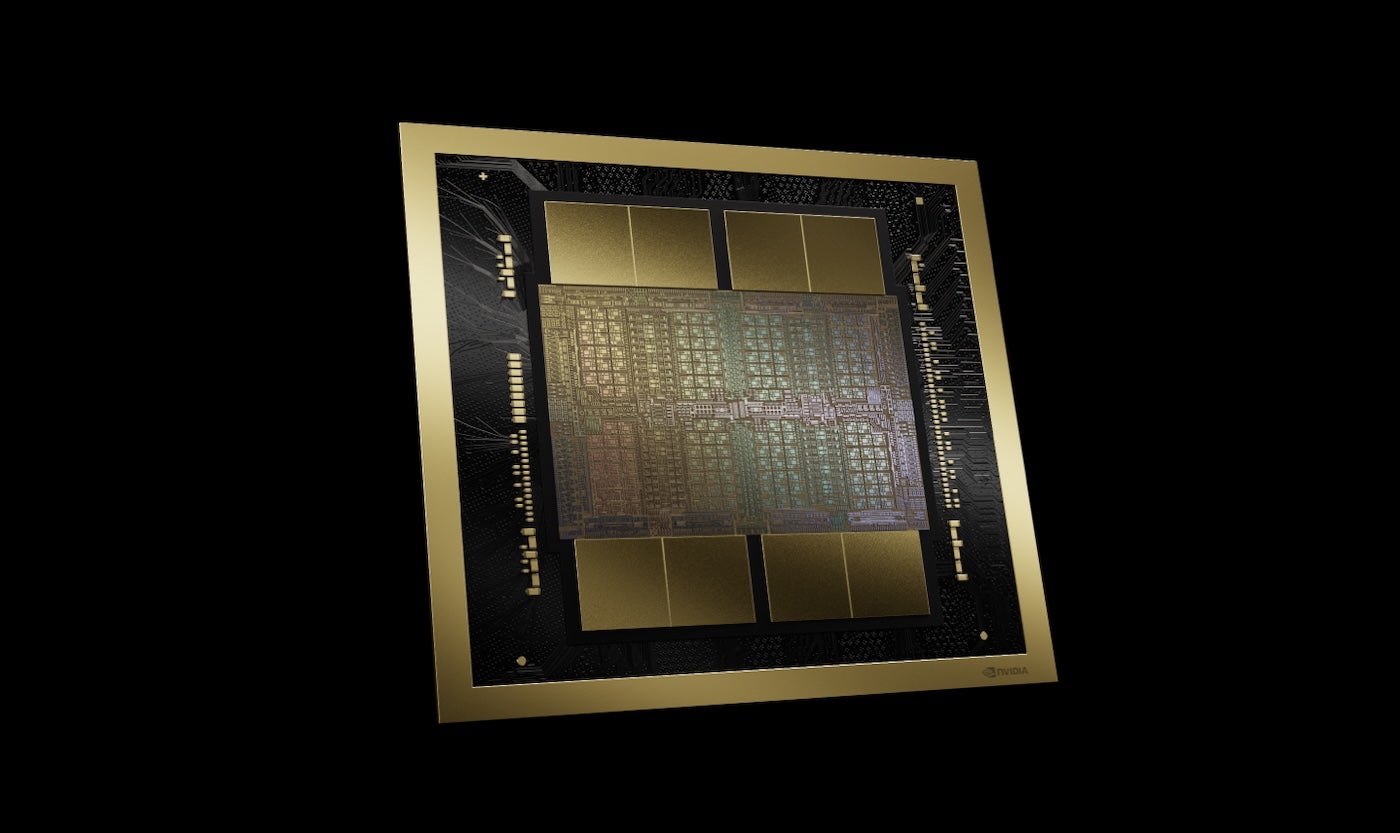

NVIDIA’s latest GPU platform is the Blackwell (Determine A), which corporations together with AWS, Microsoft and Google plan to undertake for generative AI and different trendy computing duties, NVIDIA CEO Jensen Huang introduced through the keynote on the NVIDIA GTC convention on March 18 in San Jose, California.

Determine A

Blackwell-based merchandise will enter the market from NVIDIA companions worldwide in late 2024. Huang introduced an extended lineup of extra applied sciences and companies from NVIDIA and its companions, talking of generative AI as only one aspect of accelerated computing.

“While you develop into accelerated, your infrastructure is CUDA GPUs,” Huang stated, referring to CUDA, NVIDIA’s parallel computing platform and programming mannequin. “And when that occurs, it’s the identical infrastructure as for generative AI.”

Blackwell permits giant language mannequin coaching and inference

The Blackwell GPU platform comprises two dies related by a ten terabytes per second chip-to-chip interconnect, which means either side can work basically as if “the 2 dies suppose it’s one chip,” Huang stated. It has 208 billion transistors and is manufactured utilizing NVIDIA’s 208 billion 4NP TSMC course of. It boasts 8 TB/S reminiscence bandwidth and 20 pentaFLOPS of AI efficiency.

For enterprise, this implies Blackwell can carry out coaching and inference for AI fashions scaling as much as 10 trillion parameters, NVIDIA stated.

Blackwell is enhanced by the next applied sciences:

- The second technology of the TensorRT-LLM and NeMo Megatron, each from NVIDIA.

- Frameworks for double the compute and mannequin sizes in comparison with the primary technology transformer engine.

- Confidential computing with native interface encryption protocols for privateness and safety.

- A devoted decompression engine for accelerating database queries in knowledge analytics and knowledge science.

Concerning safety, Huang stated the reliability engine “does a self take a look at, an in-system take a look at, of each little bit of reminiscence on the Blackwell chip and all of the reminiscence connected to it. It’s as if we shipped the Blackwell chip with its personal tester.”

Blackwell-based merchandise will likely be obtainable from companion cloud service suppliers, NVIDIA Cloud Associate program corporations and choose sovereign clouds.

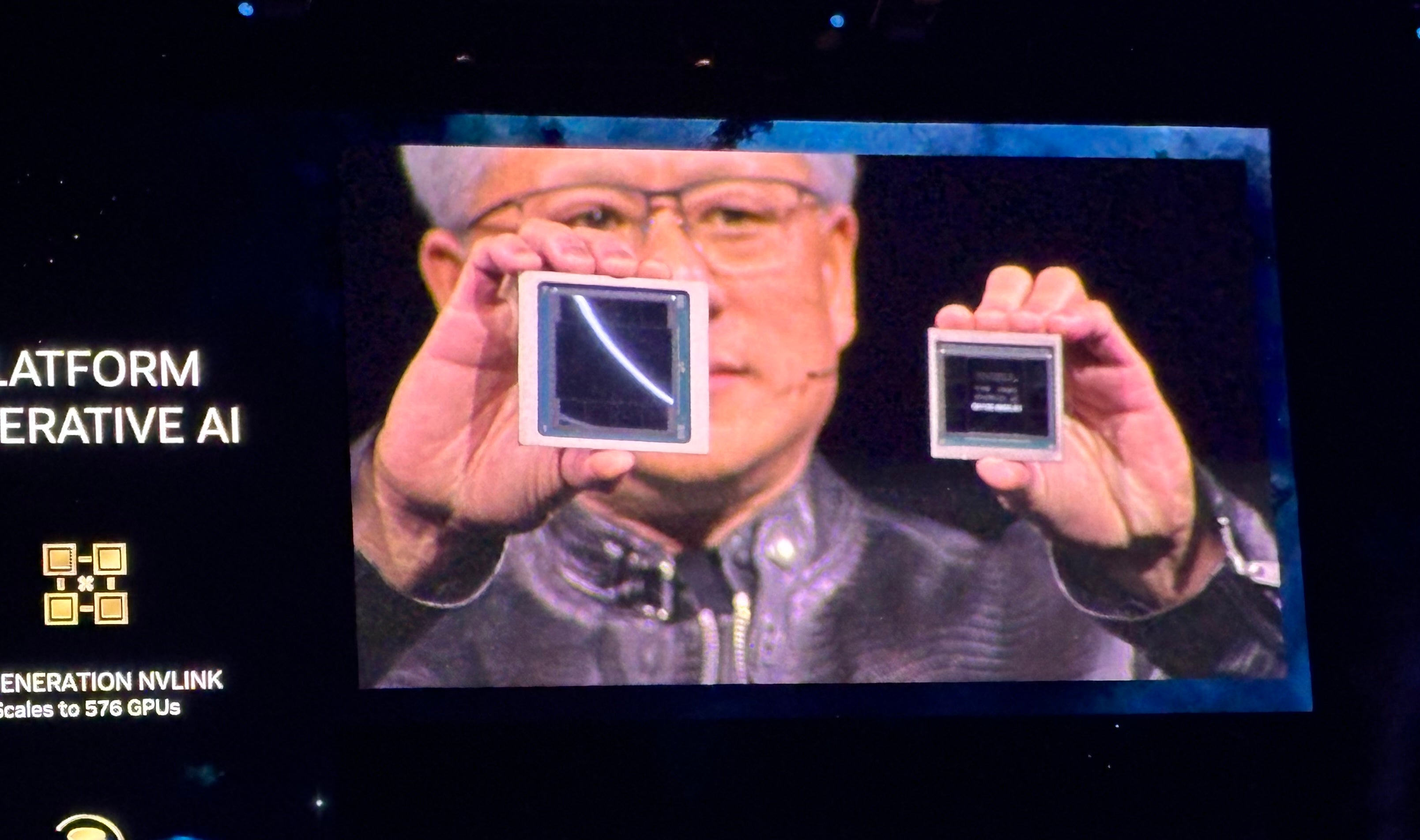

The Blackwell line of GPUs follows the Grace Hopper line of GPUs, which debuted in 2022 (Determine B). NVIDIA says Blackwell will run real-time generative AI on trillion-parameter LLMs at 25x much less value and fewer power consumption than the Hopper line.

Determine B

NVIDIA GB200 Grace Blackwell Superchip connects a number of Blackwell GPUs

Together with the Blackwell GPUs, the corporate introduced the NVIDIA GB200 Grace Blackwell Superchip, which hyperlinks two NVIDIA B200 Tensor Core GPUs to the NVIDIA Grace CPU – offering a brand new, mixed platform for LLM inference. The NVIDIA GB200 Grace Blackwell Superchip could be linked with the corporate’s newly-announced NVIDIA Quantum-X800 InfiniBand and Spectrum-X800 Ethernet platforms for speeds as much as 800 GB/S.

The GB200 will likely be obtainable on NVIDIA DGX Cloud and thru AWS, Google Cloud and Oracle Cloud Infrastructure cases later this 12 months.

New server design appears to be like forward to trillion-parameter AI fashions

The GB200 is one part of the newly introduced GB200 NVL72, a rack-scale server design that packages collectively 36 Grace CPUs and 72 Blackwell GPUs for 1.8 exaFLOPs of AI efficiency. NVIDIA is waiting for potential use instances for large, trillion-parameter LLMs, together with persistent reminiscence of conversations, advanced scientific purposes and multimodal fashions.

The GB200 NVL72 combines the fifth-generation of NVLink connectors (5,000 NVLink cables) and the GB200 Grace Blackwell Superchip for an enormous quantity of compute energy Huang calls “an exoflops AI system in a single single rack.”

“That’s greater than the common bandwidth of the web … we might principally ship every little thing to all people,” Huang stated.

“Our purpose is to repeatedly drive down the fee and power – they’re straight correlated with one another – of the computing,” Huang stated.

Cooling the GB200 NVL72 requires two liters of water per second.

The subsequent technology of NVLink brings accelerated knowledge heart structure

The fifth-generation of NVLink gives 1.8TB/s bidirectional throughput per GPU communication amongst as much as 576 GPUs. This iteration of NVLink is meant for use for essentially the most highly effective advanced LLMs obtainable at the moment.

“Sooner or later, knowledge facilities are going to be considered an AI manufacturing facility,” Huang stated.

Introducing the NVIDIA Inference Microservices

One other component of the potential “AI manufacturing facility” is the NVIDIA Inference Microservice, or NIM, which Huang described as “a brand new manner so that you can obtain and package deal software program.”

NVIDIA’s NIMs are microservices containing the APIs, domain-specific code, optimized inference engines and enterprise runtime wanted to run generative AI. These cloud-native microservices could be optimized to the variety of GPUs the shopper makes use of, and could be run within the cloud or in an owned knowledge heart. NIMs let builders use APIs, NVIDIA CUDA and Kubernetes in a single package deal.

SEE: Python stays the preferred programming language in line with the TIOBE Index. (TechRepublic)

NIMs harness AI for constructing AI, streamlining a number of the heavy-duty work reminiscent of inference and coaching required to construct chatbots. By domain-specific CUDA libraries, NIMs could be custom-made to extremely particular industries reminiscent of healthcare.

As an alternative of writing code to program an AI, Huang stated, builders can “assemble a staff of AIs” that work on the method contained in the NIM.

“We wish to construct chatbots – AI copilots – that work alongside our designers,” Huang stated.

NIMs can be found beginning March 18. Builders can experiment with NIMs for no cost and run them by means of a NVIDIA AI Enterprise 5.0 subscription. NIMs can be found in Amazon SageMaker, Google Kubernetes Engine and Microsoft Azure AI, and might interoperate with AI frameworks Deepset, LangChain and LlamaIndex.

New instruments launched for NVIDIA AI Enterprise in model 5.0

NVIDIA launched the 5.0 model of AI Enterprise, its AI deployment platform meant to assist organizations deploy generative AI merchandise to its clients. 5.0 of NVIDIA AI Enterprise provides the next:

- NIMs.

- CUDA-X microservices for all kinds of GPU-accelerated AI use instances.

- AI Workbench, a developer toolkit.

- Help for Purple Hat OpenStack Platform.

- Expanded assist for brand new NVIDIA GPUs, networking {hardware} and virtualization software program.

NVIDIA’s retrieval-augmented technology giant language mannequin operator is in early entry for AI Enterprise 5.0 now.

AI Enterprise 5.0 is on the market by means of Cisco, Dell Applied sciences, HP, HPE, Lenovo, Supermicro and different suppliers.

Different main bulletins from NVIDIA at GTC 2024

Huang introduced a variety of latest services throughout accelerated computing and generative AI through the NVIDIA GTC 2024 keynote.

NVIDIA introduced cuPQC, a library used to speed up post-quantum cryptography. Builders engaged on post-quantum cryptography can attain out to NVIDIA for updates about availability.

NVIDIA’s X800 sequence of community switches accelerates AI infrastructure. Particularly, the X800 sequence comprises the NVIDIA Quantum-X800 InfiniBand or NVIDIA Spectrum-X800 Ethernet switches, the NVIDIA Quantum Q3400 change and the NVIDIA ConnectXR-8 SuperNIC. The X800 switches will likely be obtainable in 2025.

Main partnerships detailed through the NVIDIA’s keynote embody:

- NVIDIA’s full-stack AI platform will likely be on Oracle’s Enterprise AI beginning March 18.

- AWS will present entry to NVIDIA Grace Blackwell GPU-based Amazon EC2 cases and NVIDIA DGX Cloud with Blackwell safety.

- NVIDIA will speed up Google Cloud with the NVIDIA Grace Blackwell AI computing platform and the NVIDIA DGX Cloud service, coming to Google Cloud. Google has not but confirmed an availability date, though it’s prone to be late 2024. As well as, the NVIDIA H100-powered DGX Cloud platform is mostly obtainable on Google Cloud as of March 18.

- Oracle will use the NVIDIA Grace Blackwell in its OCI Supercluster, OCI Compute and NVIDIA DGX Cloud on Oracle Cloud Infrastructure. Some mixed Oracle-NVIDIA sovereign AI companies can be found as of March 18.

- Microsoft will undertake the NVIDIA Grace Blackwell Superchip to speed up Azure. Availability could be anticipated later in 2024.

- Dell will use NVIDIA’s AI infrastructure and software program suite to create Dell AI Manufacturing facility, an end-to-end AI enterprise resolution, obtainable as of March 18 by means of conventional channels and Dell APEX. At an undisclosed time sooner or later, Dell will use the NVIDIA Grace Blackwell Superchip as the premise for a rack scale, high-density, liquid-cooled structure. The Superchip will likely be appropriate with Dell’s PowerEdge servers.

- SAP will add NVIDIA retrieval-augmented technology capabilities into its Joule copilot. Plus, SAP will use NVIDIA NIMs and different joint companies.

“The entire trade is gearing up for Blackwell,” Huang stated.

Rivals to NVIDIA’s AI chips

NVIDIA competes primarily with AMD and Intel with reference to offering enterprise AI. Qualcomm, SambaNova, Groq and all kinds of cloud service suppliers play in the identical area relating to generative AI inference and coaching.

AWS has its proprietary inference and coaching platforms: Inferentia and Trainium. In addition to partnering with NVIDIA on all kinds of merchandise, Microsoft has its personal AI coaching and inference chip: the Maia 100 AI Accelerator in Azure.

Disclaimer: NVIDIA paid for my airfare, lodging and a few meals for the NVIDIA GTC occasion held March 18 – 21 in San Jose, California.