Nvidia’s newest and fastest GPU, code-named Blackwell, is here and will underpin the company’s AI plans this year. The chip offers performance improvements over its predecessors, including the red-hot H100 and A100 GPUs. Customers are demanding more AI performance, and the GPUs are primed to succeed given the pent-up demand for higher-performing GPUs.

The GPU can train 1-trillion-parameter models, said Ian Buck, vice president of high-performance and hyperscale computing at Nvidia, in a press briefing.

Systems with up to 576 Blackwell GPUs can be linked together to train multi-trillion-parameter models.

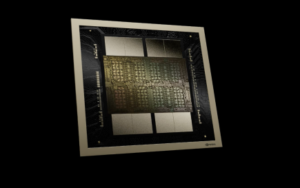

The GPU has 208 billion transistors and was made using TSMC’s 4-nanometer process. That’s about 2.5 times as many transistors as the predecessor H100 GPU, which is the first clue to significant performance improvements.
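The transistor comparison is simple arithmetic. The sketch below assumes the H100 has 80 billion transistors, which is Nvidia's published Hopper figure but is not stated in this article. On that assumption the ratio works out to roughly 2.6x, which fits the approximate multiple quoted above.

```python
# Transistor counts in billions.
# Assumption: the H100 figure (80 billion) comes from Nvidia's Hopper spec, not this article.
BLACKWELL_TRANSISTORS_B = 208
H100_TRANSISTORS_B = 80

ratio = BLACKWELL_TRANSISTORS_B / H100_TRANSISTORS_B
print(f"Blackwell has {ratio:.1f}x the transistors of H100")
```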

AI is a memory-intensive process, and data needs to be temporarily stored in RAM. The GPU has 192GB of HBM3E memory, the same memory type used in last year’s H200 GPU, which carries 141GB.

Nvidia is focusing on scaling the number of Blackwell GPUs to take on larger AI jobs. “This will expand AI data center scale beyond 100,000 GPUs,” Buck said.

The GPU delivers “20 petaflops of AI performance on a single GPU,” Buck said.

Buck provided fuzzy performance numbers designed to impress, and real-world performance numbers were unavailable. However, Nvidia likely used FP4, a new data type with Blackwell, to measure performance and reach the 20-petaflop figure.

The predecessor H100 provided about 4 petaflops of performance for the FP8 data type and about 2 petaflops for FP16.

“It delivers 4 times the training performance of Hopper, 30 times the inference performance overall, and 25 times better energy efficiency,” Buck said.

The FP4 data type is for inferencing. It allows the fastest computation on smaller packets of data and returns results much faster. The result? Faster AI performance, but less precision. FP64 and FP32 provide higher-precision computing but aren’t designed for AI.
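The sketch below rounds ordinary numbers onto a 4-bit floating-point grid to show the precision trade-off concretely. It assumes the E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit) defined in the Open Compute Project's Microscaling (MX) specification. The article does not say which FP4 encoding Blackwell uses. Real FP4 pipelines also apply per-block scale factors, and this sketch omits them.

```python
# Positive magnitudes representable in E2M1 FP4.
# Assumed layout: 1 sign bit, 2 exponent bits (bias 1), 1 mantissa bit, no inf/NaN.
E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def quantize_fp4(x: float) -> float:
    """Round x to the nearest E2M1 value, saturating at +/-6.0.

    Ties resolve to the smaller magnitude for simplicity; hardware
    usually rounds to nearest-even, which can differ on exact ties.
    """
    sign = -1.0 if x < 0 else 1.0
    mag = min(abs(x), E2M1_MAGNITUDES[-1])  # saturate instead of overflowing
    nearest = min(E2M1_MAGNITUDES, key=lambda v: abs(v - mag))
    return sign * nearest


# Every input collapses onto one of only 15 distinct values.
for value in [0.3, 1.2, 2.6, 5.0, 100.0, -0.7]:
    q = quantize_fp4(value)
    print(f"{value:>6} -> {q:>4}  (error {abs(value - q):.2f})")
```

With only 4 bits, each value needs a quarter of the storage and bandwidth of FP16. That is the source of the speed. The large rounding errors above are the loss in precision.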

The GPU consists of two dies packaged together. They communicate via an interface called NV-HBI, which transfers data at 10 terabytes per second. Blackwell’s 192GB of HBM3E memory is supported by 8 TB/s of memory bandwidth.
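The arithmetic below shows why memory bandwidth matters as much as capacity. It computes the idealized time to read all 192GB of HBM3E once at the quoted 8 TB/s. It then applies a common rule of thumb for batch-size-1 LLM decoding, in which every generated token reads all the weights once, to get an upper bound on tokens per second. The rule of thumb and the assumption that the weights fill all of memory are illustrative and do not come from the article.

```python
# Figures quoted in the article, in decimal units (1 GB = 1e9 B, 1 TB = 1e12 B).
HBM_CAPACITY_BYTES = 192e9   # 192GB of HBM3E per GPU
HBM_BANDWIDTH_BPS = 8e12     # 8 TB/s of memory bandwidth


def sweep_time_s(num_bytes: float, bandwidth_bps: float) -> float:
    """Idealized time to stream num_bytes at a sustained bandwidth (no overheads)."""
    return num_bytes / bandwidth_bps


full_sweep_s = sweep_time_s(HBM_CAPACITY_BYTES, HBM_BANDWIDTH_BPS)

# Assumption: a memory-bound model whose weights fill HBM must read them all
# once per generated token at batch size 1, so this caps decode speed.
max_tokens_per_s = 1 / full_sweep_s

print(f"One full pass over HBM: {full_sweep_s * 1e3:.1f} ms")
print(f"Batch-1 decode ceiling: {max_tokens_per_s:.1f} tokens/s")
```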

The Systems

Nvidia has also created systems with Blackwell GPUs and Grace CPUs. First, it created the GB200 Superchip, which pairs two Blackwell GPUs with its Grace CPU. Second, the company created a liquid-cooled, full-rack system called the GB200 NVL72. It has 36 GB200 Superchips and 72 GPUs interconnected in a grid format.

The GB200 NVL72 system delivers 720 petaflops of training performance and 1.4 exaflops of inferencing performance. It can support models with up to 27 trillion parameters. The GPUs are linked via a new NVLink interconnect, which has a bandwidth of 1.8TB/s.
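Breaking the rack-level NVL72 figures down per GPU is a useful sanity check. The sketch also tests one plausible reading of the 27-trillion-parameter figure that Nvidia does not state here: weights stored in FP4 at 0.5 bytes per parameter, ignoring activations, caches, optimizer state, and scale factors, compared against the rack's pooled GPU memory at the 192GB-per-GPU figure quoted earlier.

```python
# Rack-level figures quoted in the article for the GB200 NVL72.
SUPERCHIPS = 36
GPUS_PER_SUPERCHIP = 2      # each GB200 Superchip pairs two Blackwell GPUs
TRAINING_PFLOPS = 720
INFERENCE_PFLOPS = 1_400    # "1.4 exaflops", rounded in the article
MAX_PARAMS = 27e12          # 27-trillion-parameter models
HBM_PER_GPU_GB = 192

gpus = SUPERCHIPS * GPUS_PER_SUPERCHIP
print(f"GPUs per rack: {gpus}")
print(f"Training PF per GPU: {TRAINING_PFLOPS / gpus:.1f}")
print(f"Inference PF per GPU: {INFERENCE_PFLOPS / gpus:.1f}")

# Assumption: FP4 weights take 4 bits = 0.5 bytes per parameter; nothing else counted.
BYTES_PER_FP4_PARAM = 0.5
weights_tb = MAX_PARAMS * BYTES_PER_FP4_PARAM / 1e12
pooled_hbm_tb = gpus * HBM_PER_GPU_GB / 1e3
print(f"FP4 weights for 27T params: {weights_tb:.1f} TB vs pooled HBM: {pooled_hbm_tb:.1f} TB")
```

The per-GPU inference figure of roughly 19.4 petaflops sits close to the 20 petaflops Buck quoted for a single GPU. The FP4 weight estimate lands just under the rack's pooled memory. Both results are consistent with the stated figures but are inferences, not Nvidia statements.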

The GB200 NVL72 will come to cloud providers including Google Cloud and Oracle Cloud this year. It will also be available via Microsoft Azure and AWS.

Nvidia is building an AI supercomputer with AWS called Project Ceiba, which can deliver 400 exaflops of AI performance.

“We’ve now upgraded it to be Grace-Blackwell, supporting … 20,000 GPUs and will now deliver over 400 exaflops of AI,” Buck said, adding that the system will be live later this year.

Nvidia also announced an AI supercomputer called DGX SuperPOD. It has eight GB200 systems, or 576 GPUs, and can deliver 11.5 exaflops of FP4 AI performance. The GB200 systems can be connected via the NVLink interconnect, which can sustain high speeds over a short distance.
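The SuperPOD numbers can be cross-checked against the NVL72 figures. The sketch assumes each of the eight GB200 systems is a 72-GPU NVL72 rack, which matches the 576-GPU total. Multiplying the rounded 1.4 exaflops per rack gives 11.2 exaflops, so the quoted 11.5 exaflops implies roughly 1.44 exaflops per rack. Spread across 576 GPUs, it also comes back to about the 20 petaflops per GPU cited earlier.

```python
NVL72_RACKS = 8
GPUS_PER_RACK = 72
FP4_EXAFLOPS_PER_RACK = 1.4    # article's rounded NVL72 inference figure
SUPERPOD_FP4_EXAFLOPS = 11.5   # article's SuperPOD figure

total_gpus = NVL72_RACKS * GPUS_PER_RACK
naive_total_ef = NVL72_RACKS * FP4_EXAFLOPS_PER_RACK
implied_ef_per_rack = SUPERPOD_FP4_EXAFLOPS / NVL72_RACKS
per_gpu_pf = SUPERPOD_FP4_EXAFLOPS * 1_000 / total_gpus  # 1 exaflop = 1,000 petaflops

print(f"GPUs: {total_gpus}")
print(f"8 x 1.4 EF = {naive_total_ef:.1f} EF; 11.5 EF implies {implied_ef_per_rack:.3f} EF per rack")
print(f"FP4 petaflops per GPU: {per_gpu_pf:.1f}")
```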

Additionally, the DGX SuperPOD can link up tens of thousands of GPUs with the Nvidia Quantum InfiniBand networking stack. This networking bandwidth is 1,800 gigabytes per second.

Nvidia also launched another system called DGX B200, which includes Intel’s fifth-generation Xeon chips, code-named Emerald Rapids. The system pairs eight B200 GPUs with two Emerald Rapids chips. It can also be built into x86-based SuperPOD systems. The systems can provide up to 144 petaflops of AI performance and include 1.4TB of GPU memory and 64TB/s of memory bandwidth.
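Dividing the DGX B200 totals by its eight GPUs gives per-GPU figures that can be compared with the Blackwell numbers quoted earlier. The per-GPU bandwidth matches the 8 TB/s figure exactly. The compute figure of 18 petaflops falls below the 20 petaflops quoted for a single Blackwell GPU. The memory figure, even allowing for rounding of the 1.4TB total, falls below 192GB. The article does not explain either gap, so treat this as arithmetic on the stated numbers only.

```python
# DGX B200 totals as quoted in the article.
GPUS = 8
AI_PFLOPS = 144
GPU_MEMORY_TB = 1.4            # rounded in the article
MEMORY_BANDWIDTH_TBPS = 64

per_gpu_pf = AI_PFLOPS / GPUS
per_gpu_memory_gb = GPU_MEMORY_TB * 1_000 / GPUS
per_gpu_bandwidth_tbps = MEMORY_BANDWIDTH_TBPS / GPUS

print(f"AI petaflops per GPU: {per_gpu_pf:.1f} (vs 20 quoted for Blackwell)")
print(f"GPU memory per GPU: ~{per_gpu_memory_gb:.0f} GB (vs 192 GB quoted for Blackwell)")
print(f"Memory bandwidth per GPU: {per_gpu_bandwidth_tbps:.1f} TB/s (vs 8 TB/s quoted)")
```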

The DGX systems will be available later this year.

Predictive Maintenance

The Blackwell GPUs and DGX systems have predictive maintenance features to keep them in top shape, said Charlie Boyle, vice president of DGX systems at Nvidia, in an interview with HPCwire.

“We’re monitoring thousands of points of data every second to see how the job can get optimally done,” Boyle said.

The predictive maintenance features are similar to RAS (reliability, availability, and serviceability) features in servers. They combine hardware and software RAS features in the systems and GPUs.

“There are specific new … features in the chip to help us predict things that are going on. This feature isn’t looking at the trail of data coming off of all these GPUs,” Boyle said.

Nvidia is also implementing AI features for predictive maintenance.

“We have a predictive maintenance AI that we run at the cluster level so we see which nodes are healthy, which nodes aren’t,” Boyle said.
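Nvidia did not describe how its cluster-level predictive maintenance model works. The sketch below is a generic illustration of cluster-level health screening, not Nvidia's method. It flags nodes whose telemetry deviates sharply from the fleet median, using a modified z-score based on the median absolute deviation. The node names, the temperature metric, and the 3.5 threshold are all hypothetical.

```python
from statistics import median


def flag_unhealthy(readings: dict[str, float], threshold: float = 3.5) -> list[str]:
    """Return node names whose reading is an outlier by modified z-score.

    The median absolute deviation (MAD) is robust to the very outliers
    being hunted; 0.6745 rescales MAD to be comparable to a standard
    deviation for normally distributed data.
    """
    values = list(readings.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread at all, so nothing stands out on this metric
    return sorted(
        node for node, v in readings.items()
        if abs(0.6745 * (v - med) / mad) > threshold
    )


# Hypothetical GPU temperatures (degrees C) reported by each node.
telemetry = {"node-01": 61.0, "node-02": 63.5, "node-03": 62.0,
             "node-04": 60.5, "node-05": 88.0, "node-06": 62.5}
print(flag_unhealthy(telemetry))
```

A production system would track many signals over time rather than a single snapshot. The median-based score is used here because one failing node cannot drag the baseline toward itself.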

If a job dies, the feature helps minimize restart time. “On a very large job that used to take minutes, potentially hours, we’re trying to get that down to seconds,” Boyle said.

Software Updates

Nvidia also announced AI Enterprise 5.0, the overarching software platform that harnesses the speed and performance of the Blackwell GPUs.

As Datanami previously reported, the NIM software includes new tools for developers, including a copilot to make the software easier to use. Nvidia is trying to steer developers toward writing applications in CUDA, the company’s proprietary development platform.

The software costs $4,500 per GPU per year or $1 per GPU per hour.
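The two pricing options imply a break-even point. The sketch assumes the hourly rate is billed only for hours actually used and uses a 365-day year; the article states neither. Under those assumptions, the annual license becomes cheaper once a GPU runs more than about half the year.

```python
ANNUAL_LICENSE_USD = 4_500
HOURLY_RATE_USD = 1.0
HOURS_PER_YEAR = 24 * 365  # 8,760; ignores leap years

# GPU-hours at which both options cost the same.
break_even_hours = ANNUAL_LICENSE_USD / HOURLY_RATE_USD
utilization = break_even_hours / HOURS_PER_YEAR
always_on_hourly_cost = HOURLY_RATE_USD * HOURS_PER_YEAR

print(f"Break-even: {break_even_hours:,.0f} GPU-hours ({utilization:.0%} of a year)")
print(f"Hourly billing for a GPU running all year: ${always_on_hourly_cost:,.0f}")
```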

A feature called NVIDIA NIM is a runtime that can automate the deployment of AI models. The goal is to make it faster and easier for organizations to run AI.

“Just let Nvidia do the work to produce these models for them in the most efficient, enterprise-grade way so that they can do the rest of their work,” said Manuvir Das, vice president of enterprise computing at Nvidia, during the press briefing.

NIM is more like a copilot for developers, helping them with coding, finding features, and using other tools to deploy AI more easily. It is one of many new microservices that the company has added to the software package.