Machine learning models have long worked with a single data mode, known as the unimodal mode. This meant text for translation and language modeling, images for object detection and image classification, and audio for speech recognition.

However, human intelligence is not restricted to a single data modality. People can read and write text, see images, and watch videos. They can listen for unusual noises that signal danger while also listening to music for relaxation. Working with multimodal data is therefore necessary for both humans and artificial intelligence (AI) to function in the real world.

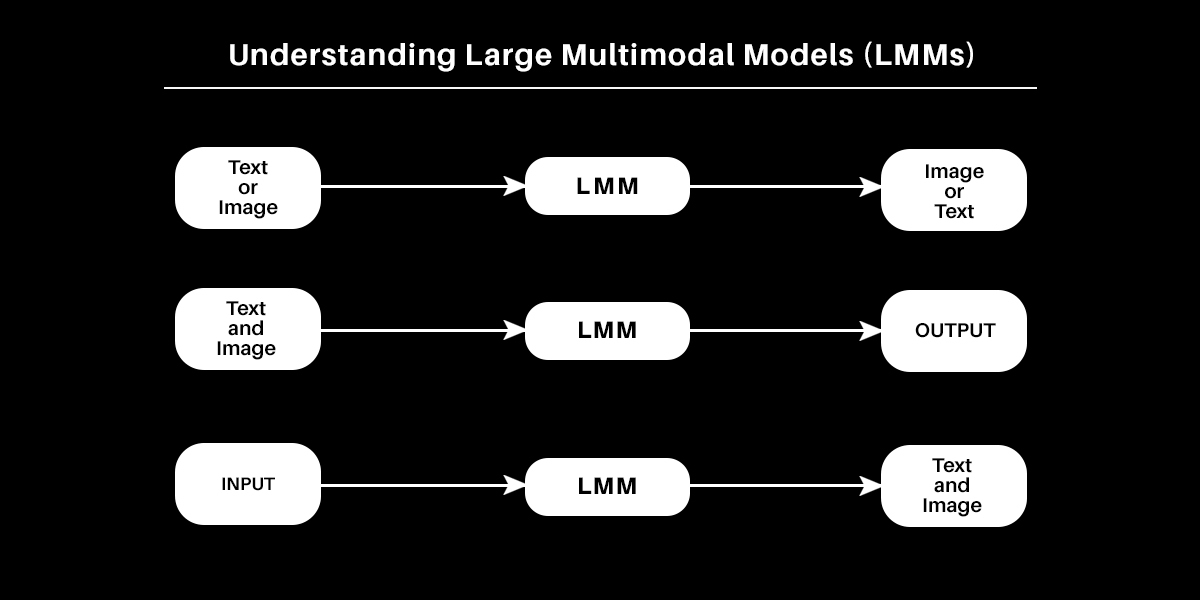

A major advance in AI research and development is arguably the incorporation of additional modalities, such as image inputs, into large language models (LLMs), resulting in large multimodal models (LMMs). It is worth clarifying what exactly LMMs are, because not every multimodal system is an LMM. A system can be multimodal in any of the following ways:

1. Input and output are of different modalities (text to image, or image to text).

2. Inputs are multimodal (the system can process both text and images).

3. Outputs are multimodal (the system can produce both text and images).
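The three definitions overlap, so one system can satisfy several at once. As a minimal sketch, assuming a system can be described only by the set of modalities it accepts and the set it produces, the hypothetical helper `multimodal_categories` below reports which definitions apply. Reading the first item as "the output modality set differs from the input modality set" is an interpretation, not something the definitions above spell out.

```python
from typing import FrozenSet, List


def multimodal_categories(inputs: FrozenSet[str], outputs: FrozenSet[str]) -> List[int]:
    """Return which of the three multimodal categories (1, 2, 3) a system satisfies.

    Hypothetical helper: an empty list means the system is unimodal,
    i.e. the same single modality goes in and comes out.
    """
    categories = []
    if inputs != outputs:  # 1. input and output modalities differ
        categories.append(1)
    if len(inputs) > 1:  # 2. more than one input modality
        categories.append(2)
    if len(outputs) > 1:  # 3. more than one output modality
        categories.append(3)
    return categories


# Illustrative systems, described only by their modality sets
print(multimodal_categories(frozenset({"text"}), frozenset({"image"})))          # [1]
print(multimodal_categories(frozenset({"text", "image"}), frozenset({"text"})))  # [1, 2]
print(multimodal_categories(frozenset({"text"}), frozenset({"text"})))           # []
```

The second example, text plus images in and text out, is the typical shape of an LMM built on top of an LLM.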

Use Cases for Large Multimodal Models

LMMs offer a flexible interface, letting users interact with them in whatever way suits them best: one can query them simply by typing, talking, or pointing a camera at something. One use case worth mentioning is enabling blind people to browse the Internet. Several use cases are not possible without multimodality, particularly in industries that deal with a mix of data modalities, such as healthcare, robotics, e-commerce, retail, and gaming. In addition, bringing in data from other modalities can help boost the performance of the model.
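One simple way an extra modality can improve a prediction is late fusion: separate per-modality models each output class probabilities, and the system combines them with a weighted average. The sketch below assumes that setup; the function `late_fusion`, the modality names, and the weights are hypothetical, and real LMMs usually fuse modalities inside the network rather than only at the output.

```python
from typing import Dict, List


def late_fusion(per_modality_probs: Dict[str, List[float]],
                weights: Dict[str, float]) -> List[float]:
    """Combine class-probability vectors from several modalities by weighted average."""
    total_weight = sum(weights[m] for m in per_modality_probs)
    n_classes = len(next(iter(per_modality_probs.values())))
    fused = [0.0] * n_classes
    for modality, probs in per_modality_probs.items():
        if len(probs) != n_classes:
            raise ValueError(f"{modality} has {len(probs)} classes, expected {n_classes}")
        share = weights[modality] / total_weight  # normalize weights so they sum to 1
        for k, p in enumerate(probs):
            fused[k] += share * p
    return fused


# Hypothetical two-class example: the image model alone is undecided,
# while the text model leans towards class 1.
scores = {"image": [0.5, 0.5], "text": [0.2, 0.8]}
fused = late_fusion(scores, weights={"image": 1.0, "text": 1.0})
predicted = max(range(len(fused)), key=fused.__getitem__)  # index of the highest score
print(fused, predicted)
```

Here the image model on its own cannot choose between the two classes, but the fused scores (roughly 0.35 and 0.65) favor class 1. Because the weights are normalized, they only express the relative trust placed in each modality.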

Though multimodal AI is not new, it is gathering momentum. It has tremendous potential for transforming human-like capabilities through developments in computer vision and natural language processing. LMMs come closer to imitating human perception than any previous approach.

Although the technology is still in its early stages, it already performs better than humans on several tests. Multimodal AI also has several interesting applications beyond context recognition. For example, it assists with business planning: because its machine learning algorithms can recognize various types of data, it can provide better and more informed insights.

Combining data from different streams enables it to make predictions about a company's financial outcomes and maintenance requirements. For instance, if old equipment is not receiving the desired attention, a multimodal AI can infer that it may not require frequent servicing.

AI can use a multimodal approach to recognize various types of data. People differ in the same way: one person may grasp an idea through an image, while another grasps it through a video or a song. Multimodal systems can also recognize various kinds of languages, which can prove very useful.

A combination of image and sound can enable a human to describe an object in a way that a computer cannot. Multimodal AI can help narrow that gap. Along with computer vision, multimodal systems can learn from various types of data. They can make decisions by recognizing text and objects within an image, and they can also learn about them from context.

In summary, several research projects have investigated multimodal learning, which lets AI learn from various types of data and enables machines to comprehend a human's message. Previously, many organizations concentrated their efforts on expanding their unimodal systems; however, the recent development of multimodal applications has opened doors for chip vendors and platform companies.

Multimodal systems can solve problems that are common with traditional machine learning systems. For instance, they can incorporate text and images along with audio and video. The first step here involves aligning the model's internal representations across modalities.
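The article does not say how this alignment is done. One widely used technique, popularized by CLIP, is contrastive training: each encoder maps its input into a shared embedding space, and a symmetric cross-entropy (InfoNCE) loss rewards matching image/text pairs for being more similar to each other than to every other item in the batch. The sketch below computes only that loss, assuming the encoders already produce vectors of the same dimension; the function names and the fixed `temperature` value are illustrative assumptions (CLIP itself learns the temperature), and no training loop is shown.

```python
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def _mean_cross_entropy(logits: List[Vector]) -> float:
    """Average cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def contrastive_alignment_loss(image_embs: List[Vector], text_embs: List[Vector],
                               temperature: float = 0.1) -> float:
    """Symmetric InfoNCE loss: image i and text i match; all other pairs are negatives."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine similarity of image i and text j, sharpened by the temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    # Transpose so each text is also scored against all images
    logits_t = [[logits[i][j] for i in range(n)] for j in range(n)]
    return (_mean_cross_entropy(logits) + _mean_cross_entropy(logits_t)) / 2


if __name__ == "__main__":
    images = [[1.0, 0.0], [0.0, 1.0]]
    aligned_texts = [[0.9, 0.1], [0.1, 0.9]]  # each caption points near its own image
    swapped_texts = [[0.1, 0.9], [0.9, 0.1]]  # captions paired with the wrong images
    print(contrastive_alignment_loss(images, aligned_texts))  # close to zero
    print(contrastive_alignment_loss(images, swapped_texts))  # much larger
```

Minimizing this loss during training pulls each image embedding toward its own caption and away from the others, which is what "aligned representations" means in practice: matching content from different modalities ends up close together in one space.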

Many organizations have embraced this technology. The LMM framework derives its success from language, audio, and vision networks, and by combining these technologies it can solve problems in each domain at the same time. For example, Google Translate uses a multimodal neural network for translation, a step toward integrating speech, language, and vision understanding into a single network.

The post From Large Language Models to Large Multimodal Models appeared first on Datafloq.