The advent of generative AI has supersized the appetite for GPUs and other forms of accelerated computing. To help companies scale up their accelerated compute investments in a predictable manner, GPU giant Nvidia and several server partners have borrowed a page from the world of high performance computing (HPC) and unveiled the Enterprise Reference Architectures (ERA).

Large language models (LLMs) and other foundation models have triggered a Gold Rush for GPUs, and Nvidia has arguably been the biggest beneficiary. In 2023, the company shipped 3.76 million data center GPU units, over 1 million more than in 2022. That growth hasn’t eased up in 2024, as companies continue to scramble for GPUs to power GenAI, which has driven Nvidia to become the most valuable company in the world, with a market capitalization of $3.75 trillion.

Nvidia launched its ERA program today against this backdrop of a mad scramble to scale up compute to build and serve GenAI applications. The company’s goal with ERA is to provide a blueprint to help customers scale up their HPC compute infrastructure in a predictable and repeatable manner that minimizes risk and maximizes results.

Nvidia says the ERA program will accelerate time to market for server makers while boosting performance, scalability, and manageability. This approach also bolsters security, Nvidia says, while reducing complexity. So far, Nvidia has ERA agreements in place with Dell Technologies, Hewlett Packard Enterprise, Lenovo, and Supermicro, with more server makers expected to join the program.

“By bringing the same technical components from the supercomputing world and packaging them with design recommendations based on decades of experience,” the company says in a white paper on ERA, “Nvidia’s goal is to eliminate the burden of building these systems from scratch with a streamlined approach for flexible and cost-effective configurations, taking the guesswork and risk out of deployment.”

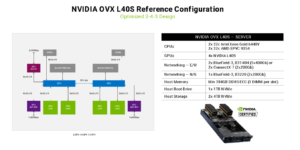

One of the Nvidia ERA reference configurations (Source: Nvidia ERA Overview whitepaper)

The ERA approach leverages certified server configurations of GPUs, CPUs, and network interface cards (NICs) that Nvidia says are “tested and validated to deliver performance at scale.” This includes the Nvidia Spectrum-X AI Ethernet platform and Nvidia BlueField-3 DPUs, among others.

The ERA is tailored toward large-scale deployments that range from four to 128 nodes, containing anywhere from 32 to 1,024 GPUs, according to Nvidia’s white paper. This is the sweet spot where Nvidia sees companies turning their data centers into “AI factories.” It’s also a bit smaller than the company’s existing NCP Reference Architecture, which is designed for larger-scale foundational model training, starting with a minimum of 128 nodes and scaling up to 100,000 GPUs.

ERA calls for several different design patterns, depending on the size of the cluster. For instance, there’s Nvidia’s “2-4-3” approach, which includes a 2U compute node that contains up to four GPUs, up to three NICs, and two CPUs. Nvidia says this can work on clusters ranging from eight to 96 nodes. Alternatively, there’s the “2-8-5” design pattern, which calls for 4U nodes equipped with up to eight GPUs, five NICs, and two CPUs. This pattern scales from four up to 64 nodes in a cluster, Nvidia says.
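To make the sizing arithmetic concrete, here is a minimal Python sketch that multiplies the per-node GPU maximums by the node ranges quoted above. The `DesignPattern` class and its field names are hypothetical, introduced only for illustration, and the reading of each pattern name as CPUs-GPUs-NICs is inferred from the figures in this article rather than taken from Nvidia’s documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DesignPattern:
    """Hypothetical container for the per-node maximums and node ranges quoted in the article."""
    name: str
    cpus_per_node: int
    max_gpus_per_node: int
    max_nics_per_node: int
    min_nodes: int
    max_nodes: int

    def gpu_range(self) -> tuple[int, int]:
        """GPU totals at the smallest and largest cluster, assuming every node is fully populated."""
        return (self.min_nodes * self.max_gpus_per_node,
                self.max_nodes * self.max_gpus_per_node)


# Figures as reported in the article; nothing here comes from an Nvidia API.
PATTERNS = [
    DesignPattern("2-4-3", cpus_per_node=2, max_gpus_per_node=4,
                  max_nics_per_node=3, min_nodes=8, max_nodes=96),
    DesignPattern("2-8-5", cpus_per_node=2, max_gpus_per_node=8,
                  max_nics_per_node=5, min_nodes=4, max_nodes=64),
]

for pattern in PATTERNS:
    low, high = pattern.gpu_range()
    print(f"{pattern.name}: {pattern.min_nodes}-{pattern.max_nodes} nodes, "
          f"up to {low}-{high} GPUs")
```

Under these assumptions, both patterns start at 32 GPUs, which matches the low end of the overall ERA range. The 1,024-GPU upper bound corresponds to 128 nodes with eight GPUs each, a configuration the article does not tie to either pattern.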

Partnering with server makers on proven architectures for accelerated compute helps to move customers toward their goal of building AI factories in a fast and secure manner, according to Nvidia.

“The transformation of traditional data centers into AI Factories is revolutionizing how enterprises process and analyze data by integrating advanced computing and networking technologies to meet the substantial computational demands of AI applications,” the company says in its white paper.
